[Hands-on] EKS + Karpenter Deployment and Troubleshooting Exercise


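Overview

Target audience

- In this post, I have written up a hands-on exercise for Amazon EKS + Karpenter. It is intended for EKS beginners, readers who want to learn how to introduce Karpenter, and readers who want to use the control plane through a private endpoint. Based on my own trial-and-error verification, it also covers some error cases and how I troubleshot them.

- Build procedures and hands-on material for EKS + Karpenter are scarce, and the build involves many steps, which makes it hard for beginners. This post organizes the whole procedure into a hands-on format to lower the barrier to learning EKS + Karpenter.

- This hands-on is based on the official Karpenter guide (https://karpenter.sh/docs/getting-started/getting-started-with-karpenter/). To bring it closer to real projects, it differs from the official guide in three main ways: it starts by building a Docker image, it builds EKS in an existing VPC, and it configures the control plane with a private endpoint.

- The EKS cluster, its related resources, the NAT Gateways, and the other resources used in this hands-on incur charges simply by running. Remember to delete them when you finish.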

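About EKS (Amazon Elastic Kubernetes Service)

- Kubernetes consists of a control plane, which manages the whole cluster, and a data plane (a set of worker nodes) where containers actually run. With EKS, you can hand off the build, operation, and scaling of the most complex part, the control plane, to an AWS managed service. In this hands-on, management of the nodes that make up the data plane is automated with Karpenter, described next.

- The Kubernetes API server endpoint exposed by the control plane can be accessed in three ways: a public endpoint, combined public and private endpoints, or a private endpoint only. This hands-on uses the private endpoint, which is closer to production use.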
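The three access modes map onto two cluster settings, endpointPublicAccess and endpointPrivateAccess (eksctl exposes them as clusterEndpoints.publicAccess and privateAccess, which appear later in this hands-on). As a minimal sketch, the hypothetical helpers below read both flags with aws eks describe-cluster and print which mode a cluster is in; they assume the AWS CLI is configured for the cluster's region.

```shell
#!/usr/bin/env bash
# Sketch: classify the EKS API server endpoint access mode.
# endpoint_mode and show_endpoint_mode are hypothetical helper names.

# Map the two boolean flags to the three access modes.
#   $1 = endpointPublicAccess (true/false), $2 = endpointPrivateAccess (true/false)
endpoint_mode() {
  case "$1,$2" in
    true,false) echo "public" ;;
    true,true)  echo "public+private" ;;
    false,true) echo "private" ;;
    *)          echo "unknown"; return 1 ;;
  esac
}

# Read both flags of an existing cluster and print its mode.
show_endpoint_mode() {
  local cluster="$1" flags
  # Text output prints the two booleans tab-separated (e.g. "True<TAB>False");
  # lowercase them so they match the patterns in endpoint_mode.
  flags="$(aws eks describe-cluster --name "${cluster}" \
    --query 'cluster.resourcesVpcConfig.[endpointPublicAccess,endpointPrivateAccess]' \
    --output text | tr '[:upper:]' '[:lower:]')"
  [ -n "${flags}" ] || return 1
  # Intentionally unquoted: split the two values into $1 and $2.
  set -- ${flags}
  endpoint_mode "$1" "$2"
}

# Example: show_endpoint_mode "${CLUSTER_NAME}"
```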

About Karpenter

- Karpenter is an open-source, high-performance autoscaler that automatically manages the worker nodes that make up the EKS data plane.

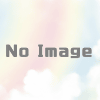

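- As the figure shows, Karpenter works differently from traditional autoscalers.

- Nodes are launched in response to unscheduled Pods: when existing nodes have no free resources and Pods cannot be scheduled (Unschedulable Pods), Karpenter detects them immediately. It then launches, just in time, an EC2 instance whose specs best fit the resources (CPU, memory, and so on) those Pods request.

- No more node group management: you do not need to prepare multiple node groups with finely defined EC2 instance types in advance. Karpenter automatically selects the best instance for the situation.

- Maximum cost efficiency: by terminating nodes quickly once workloads disappear and by actively using cheaper Spot Instances, Karpenter can reduce operating costs significantly.

- In this way, combining EKS and Karpenter lets you build a cost-efficient container platform while reducing operational overhead.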

ハンズオン1:VPC および関連リソースの作成

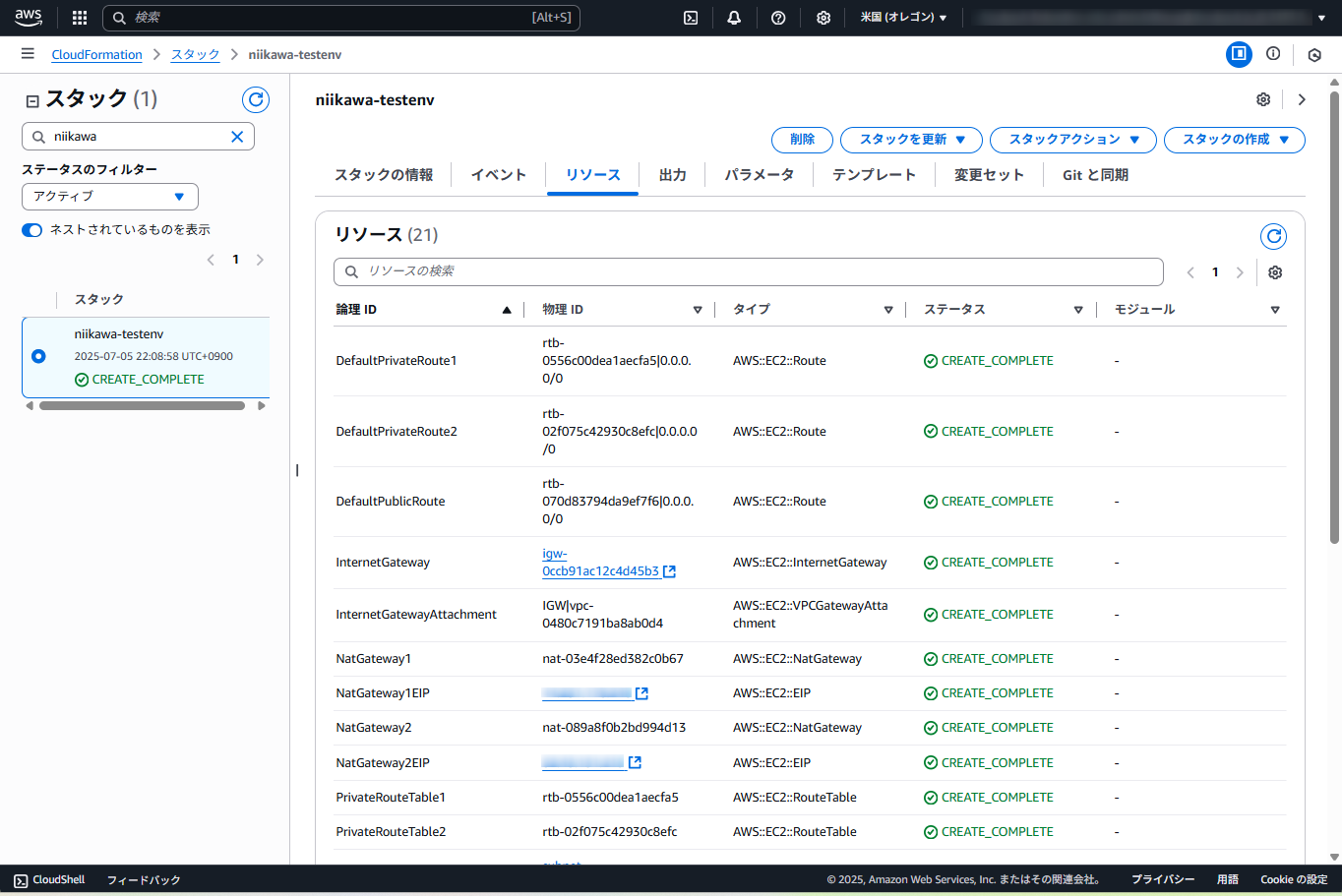

CloudFormation を使ったVPC および関連リソースの作成

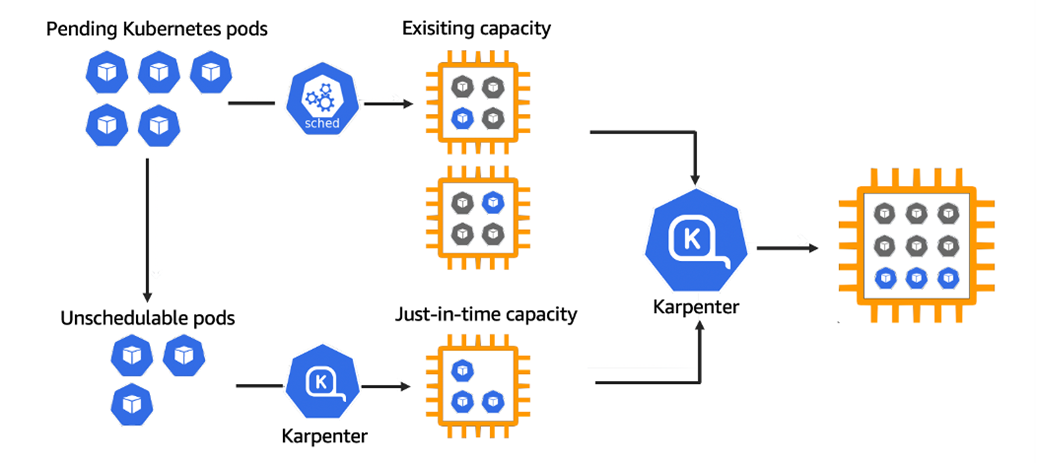

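- Use CloudFormation to create the VPC and its related resources. In the AWS Management Console, open CloudFormation.

- From "Create stack" at the top right, choose "With new resources (standard)".

- Select "Choose an existing template". Under "Specify template", select "Upload a template file", click "Choose file", and select the CloudFormation template file.

- This hands-on uses the following YAML, a customized version of the AWS CloudFormation VPC template described in this AWS documentation.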

Description:  This template deploys a VPC, with a pair of public and private subnets spread
  across two Availability Zones. It deploys an internet gateway, with a default
  route on the public subnets. It deploys a pair of NAT gateways (one in each AZ),
  and default routes for them in the private subnets.

Parameters:
  EnvironmentName:
    Description: An environment name that is prefixed to resource names
    Type: String

  VpcCIDR:
    Description: Please enter the IP range (CIDR notation) for this VPC
    Type: String
    Default: 10.192.0.0/16

  PublicSubnet1CIDR:
    Description: Please enter the IP range (CIDR notation) for the public subnet in the first Availability Zone
    Type: String
    Default: 10.192.10.0/24

  PublicSubnet2CIDR:
    Description: Please enter the IP range (CIDR notation) for the public subnet in the second Availability Zone
    Type: String
    Default: 10.192.11.0/24

  PrivateSubnet1CIDR:
    Description: Please enter the IP range (CIDR notation) for the private subnet in the first Availability Zone
    Type: String
    Default: 10.192.20.0/24

  PrivateSubnet2CIDR:
    Description: Please enter the IP range (CIDR notation) for the private subnet in the second Availability Zone
    Type: String
    Default: 10.192.21.0/24

Resources:
  VPC:
    Type: AWS::EC2::VPC
    Properties:
      CidrBlock: !Ref VpcCIDR
      EnableDnsSupport: true
      EnableDnsHostnames: true
      Tags:
        - Key: Name
          Value: !Sub ${EnvironmentName}-vpc

  InternetGateway:
    Type: AWS::EC2::InternetGateway
    Properties:
      Tags:
        - Key: Name
          Value: !Sub ${EnvironmentName}-igw

  InternetGatewayAttachment:
    Type: AWS::EC2::VPCGatewayAttachment
    Properties:
      InternetGatewayId: !Ref InternetGateway
      VpcId: !Ref VPC

  PublicSubnet1:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [ 0, !GetAZs '' ]
      CidrBlock: !Ref PublicSubnet1CIDR
      MapPublicIpOnLaunch: true
      Tags:
        - Key: Name
          Value: !Sub ${EnvironmentName}-sntpub1

  PublicSubnet2:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [ 1, !GetAZs '' ]
      CidrBlock: !Ref PublicSubnet2CIDR
      MapPublicIpOnLaunch: true
      Tags:
        - Key: Name
          Value: !Sub ${EnvironmentName}-sntpub2

  PrivateSubnet1:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [ 0, !GetAZs '' ]
      CidrBlock: !Ref PrivateSubnet1CIDR
      MapPublicIpOnLaunch: false
      Tags:
        - Key: Name
          Value: !Sub ${EnvironmentName}-sntpri1

  PrivateSubnet2:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      AvailabilityZone: !Select [ 1, !GetAZs '' ]
      CidrBlock: !Ref PrivateSubnet2CIDR
      MapPublicIpOnLaunch: false
      Tags:
        - Key: Name
          Value: !Sub ${EnvironmentName}-sntpri2

  NatGateway1EIP:
    Type: AWS::EC2::EIP
    DependsOn: InternetGatewayAttachment
    Properties:
      Domain: vpc

  NatGateway2EIP:
    Type: AWS::EC2::EIP
    DependsOn: InternetGatewayAttachment
    Properties:
      Domain: vpc

  NatGateway1:
    Type: AWS::EC2::NatGateway
    Properties:
      AllocationId: !GetAtt NatGateway1EIP.AllocationId
      SubnetId: !Ref PublicSubnet1

  NatGateway2:
    Type: AWS::EC2::NatGateway
    Properties:
      AllocationId: !GetAtt NatGateway2EIP.AllocationId
      SubnetId: !Ref PublicSubnet2

  PublicRouteTable:
    Type: AWS::EC2::RouteTable
    Properties:
      VpcId: !Ref VPC
      Tags:
        - Key: Name
          Value: !Sub ${EnvironmentName}-rtbpub

  DefaultPublicRoute:
    Type: AWS::EC2::Route
    DependsOn: InternetGatewayAttachment
    Properties:
      RouteTableId: !Ref PublicRouteTable
      DestinationCidrBlock: 0.0.0.0/0
      GatewayId: !Ref InternetGateway

  PublicSubnet1RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref PublicRouteTable
      SubnetId: !Ref PublicSubnet1

  PublicSubnet2RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref PublicRouteTable
      SubnetId: !Ref PublicSubnet2

  PrivateRouteTable1:
    Type: AWS::EC2::RouteTable
    Properties:
      VpcId: !Ref VPC
      Tags:
        - Key: Name
          Value: !Sub ${EnvironmentName}-rtbpri1

  DefaultPrivateRoute1:
    Type: AWS::EC2::Route
    Properties:
      RouteTableId: !Ref PrivateRouteTable1
      DestinationCidrBlock: 0.0.0.0/0
      NatGatewayId: !Ref NatGateway1

  PrivateSubnet1RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref PrivateRouteTable1
      SubnetId: !Ref PrivateSubnet1

  PrivateRouteTable2:
    Type: AWS::EC2::RouteTable
    Properties:
      VpcId: !Ref VPC
      Tags:
        - Key: Name
          Value: !Sub ${EnvironmentName}-rtbpri2

  DefaultPrivateRoute2:
    Type: AWS::EC2::Route
    Properties:
      RouteTableId: !Ref PrivateRouteTable2
      DestinationCidrBlock: 0.0.0.0/0
      NatGatewayId: !Ref NatGateway2

  PrivateSubnet2RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      RouteTableId: !Ref PrivateRouteTable2
      SubnetId: !Ref PrivateSubnet2

Outputs:
  VPC:
    Description: A reference to the created VPC
    Value: !Ref VPC

  PublicSubnets:
    Description: A list of the public subnets
    Value: !Join [ ",", [ !Ref PublicSubnet1, !Ref PublicSubnet2 ]]

  PrivateSubnets:
    Description: A list of the private subnets
    Value: !Join [ ",", [ !Ref PrivateSubnet1, !Ref PrivateSubnet2 ]]

  PublicSubnet1:
    Description: A reference to the public subnet in the 1st Availability Zone
    Value: !Ref PublicSubnet1

  PublicSubnet2:
    Description: A reference to the public subnet in the 2nd Availability Zone
    Value: !Ref PublicSubnet2

  PrivateSubnet1:
    Description: A reference to the private subnet in the 1st Availability Zone
    Value: !Ref PrivateSubnet1

  PrivateSubnet2:
    Description: A reference to the private subnet in the 2nd Availability Zone
    Value: !Ref PrivateSubnet2

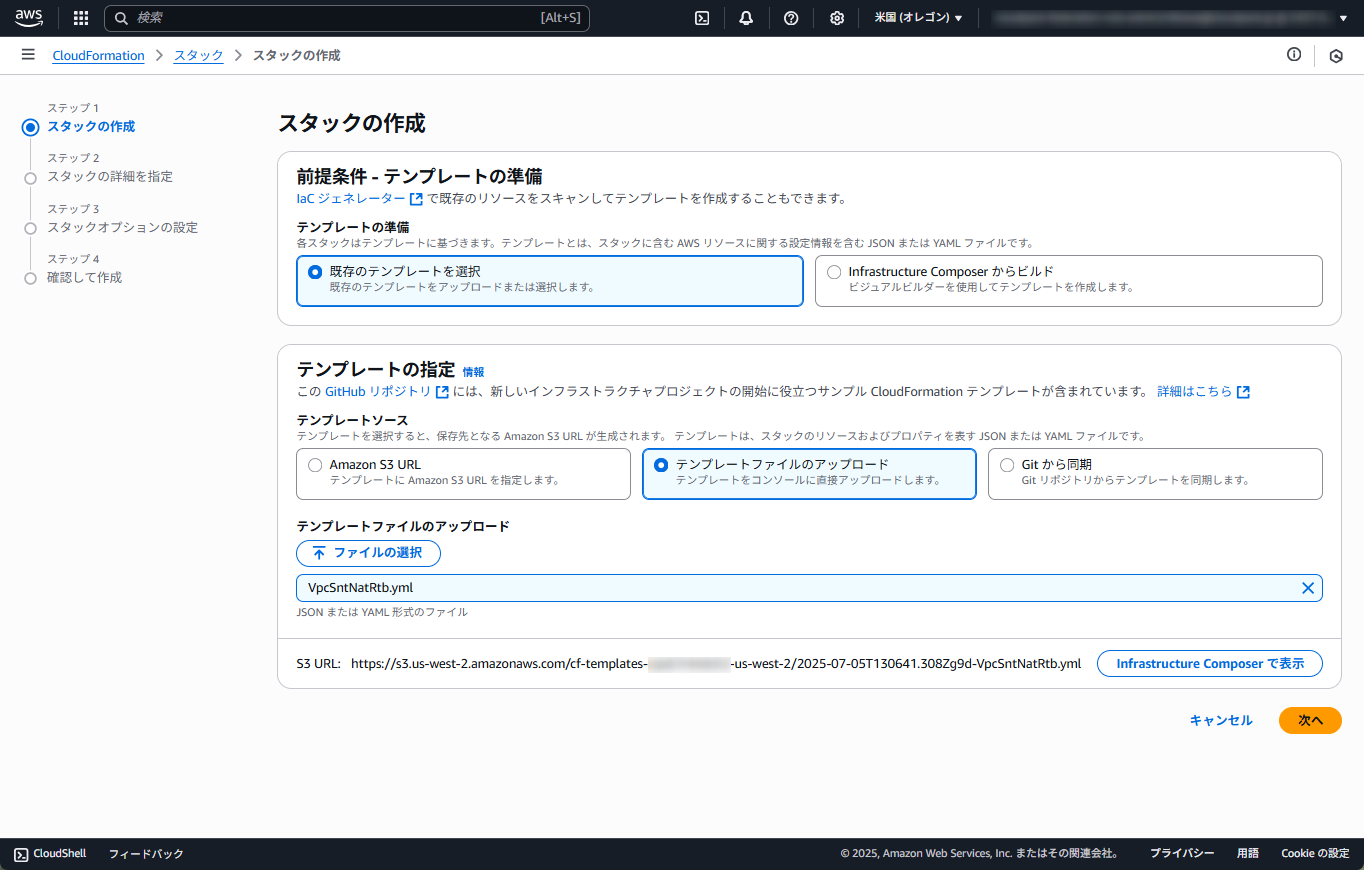

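- Enter a stack name.

- Specify the parameters. Enter any name for EnvironmentName; the other parameters (the VPC and subnet CIDRs) can be left at their defaults.

- No stack options need to be specified.

- Review the settings and click "Submit".

- In the stack list, confirm that the stack's "Status" shows "CREATE_COMPLETE". The stack takes a while to complete.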

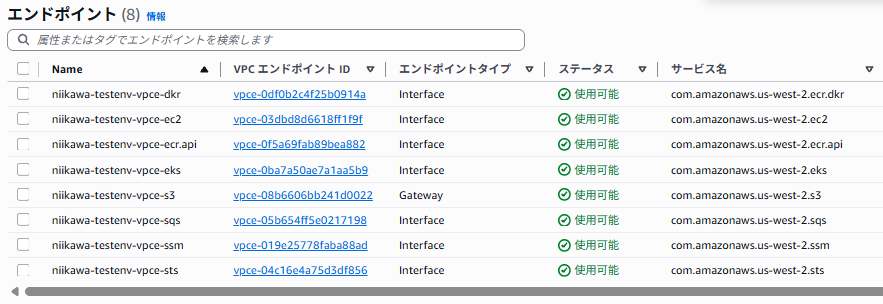
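The stack outputs (VPC, PrivateSubnet1, PublicSubnet1, and so on) are exactly what Hands-on 4 needs for VPC_ID and the subnet ID variables. As an alternative to copying them from the console, here is a minimal sketch with hypothetical helper names that reads them with the AWS CLI; replace the stack name with the one you entered.

```shell
#!/usr/bin/env bash
# Sketch: read the outputs of the VPC stack for reuse in Hands-on 4.
# get_stack_output and subnet_az are hypothetical helper names.

# Print one output value (e.g. VPC, PrivateSubnet1) of a CloudFormation stack.
get_stack_output() {
  local stack_name="$1" output_key="$2"
  aws cloudformation describe-stacks \
    --stack-name "${stack_name}" \
    --query "Stacks[0].Outputs[?OutputKey=='${output_key}'].OutputValue" \
    --output text
}

# Print the Availability Zone of a subnet, to pair it with AWS_AVAILABILITY_ZONE_A/B.
subnet_az() {
  aws ec2 describe-subnets --subnet-ids "$1" \
    --query 'Subnets[0].AvailabilityZone' --output text
}

# Example (the stack name is a placeholder):
#   STACK="your-vpc-stack-name"
#   export VPC_ID="$(get_stack_output "${STACK}" VPC)"
#   export PRIVATE_SUBNET_A_ID="$(get_stack_output "${STACK}" PrivateSubnet1)"
#   subnet_az "${PRIVATE_SUBNET_A_ID}"
```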

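About creating VPC endpoints

- The minimum VPC endpoints required when using an EKS private endpoint with Karpenter are listed below. However, because having them in place caused an error during EKS cluster creation, the VPC endpoints are created in a later step (Hands-on 7).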

- com.amazonaws.<region>.ec2

- com.amazonaws.<region>.ecr.api

- com.amazonaws.<region>.ecr.dkr

- com.amazonaws.<region>.s3 – For pulling container images

- com.amazonaws.<region>.sts – For IAM roles for service accounts

- com.amazonaws.<region>.ssm – For resolving default AMIs

- com.amazonaws.<region>.sqs – For accessing SQS if using interruption handling

- com.amazonaws.<region>.eks – For Karpenter to discover the cluster endpoint
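For reference when creating them in Hands-on 7, the list above expands into full service names per region. The sketch below (the function name is hypothetical) prints each name together with the endpoint type it would most commonly use, assuming S3 is added as a Gateway endpoint and the rest as Interface endpoints. It only prints names and creates nothing.

```shell
#!/usr/bin/env bash
# Sketch: expand the endpoint list above into full service names for a region.
# endpoint_service_names is a hypothetical helper name.

# Print "<service-name> <endpoint-type>" per line.
endpoint_service_names() {
  local region="$1" svc type
  for svc in ec2 ecr.api ecr.dkr s3 sts ssm sqs eks; do
    # Assumption: S3 as a Gateway endpoint, everything else as Interface.
    if [ "${svc}" = "s3" ]; then type="Gateway"; else type="Interface"; fi
    echo "com.amazonaws.${region}.${svc} ${type}"
  done
}

# Example of how one line could be used later (not run here; <sg-id> is a placeholder):
#   aws ec2 create-vpc-endpoint --vpc-id "${VPC_ID}" \
#     --vpc-endpoint-type Interface --service-name com.amazonaws.us-west-2.sts \
#     --subnet-ids "${PRIVATE_SUBNET_A_ID}" "${PRIVATE_SUBNET_B_ID}" \
#     --security-group-ids <sg-id> --private-dns-enabled
```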

Hands-on 2: Create a Docker image with CloudShell

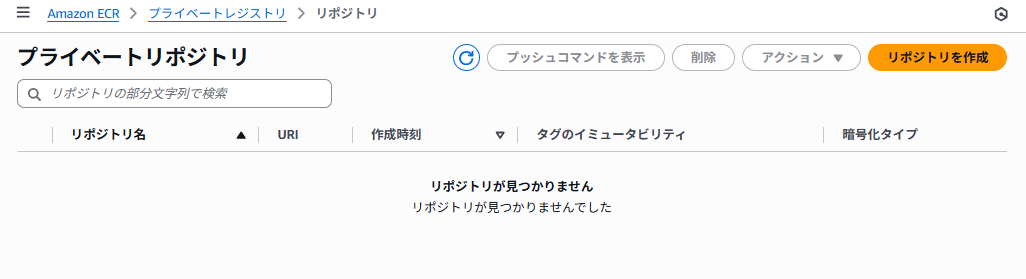

Creating the ECR repository

- In the AWS Management Console, open ECR (Elastic Container Registry), then select Private repositories.

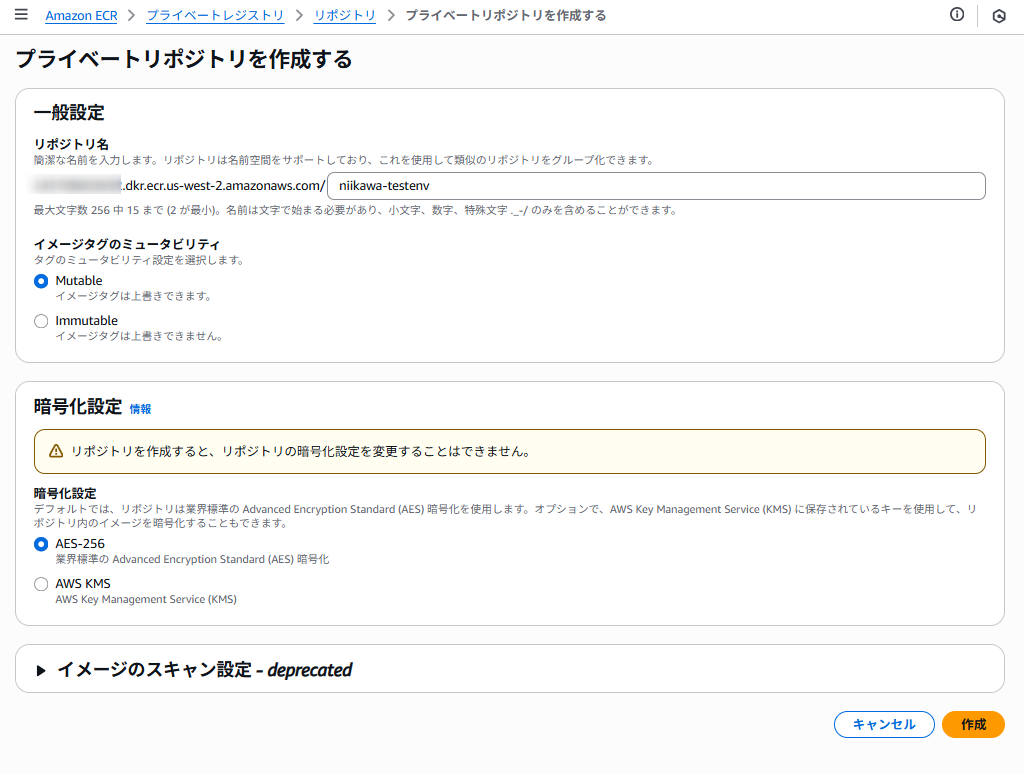

- Choose "Create repository".

- Enter the repository name and any other settings, then click "Create".

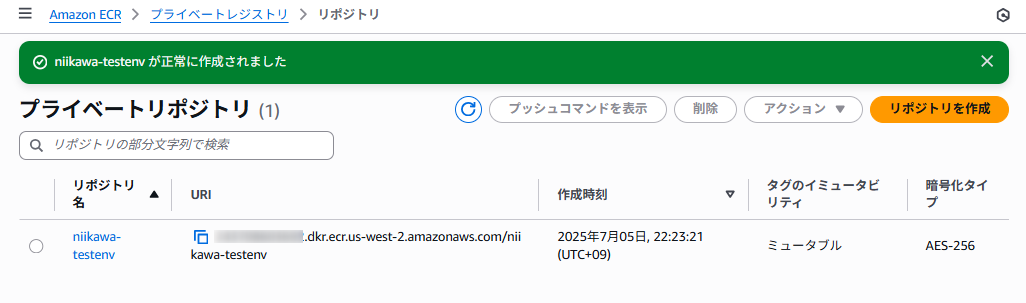

- The private repository has been created.

Creating the Docker image and pushing it to the repository

- Run the following commands in the CloudShell terminal.

mkdir ecs_hello
cd ecs_hello/
mkdir conf

- Create a Dockerfile under ecs_hello/.

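- A Dockerfile is a definition file for building a container image.

- It contains definitions such as the existing image to use as a base (the FROM instruction), files to add to the image (the ADD instruction), and commands that run while the image is being built (the RUN instruction). Commands that run when a container starts from the image are defined with CMD or ENTRYPOINT; this Dockerfile defines neither, so it inherits the startup command of the nginx base image.

- The Dockerfile used here is shown below.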

FROM nginx:latest
ADD conf/nginx.conf /etc/nginx/
RUN echo "Hello EKS!" > /usr/share/nginx/html/index.html

- Create nginx.conf under ecs_hello/conf/.

- The nginx.conf used here is shown below.

- The changes from the default are that worker_processes is set to 1 and autoindex on is specified.

- The document root is /usr/share/nginx/html.

# For more information on configuration, see:
#   * Official English Documentation: http://nginx.org/en/docs/
#   * Official Russian Documentation: http://nginx.org/ru/docs/

user nginx;
worker_processes 1;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

http {
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /var/log/nginx/access.log  main;

    sendfile            on;
    tcp_nopush          on;
    tcp_nodelay         on;
    keepalive_timeout   65;
    types_hash_max_size 4096;

    include             /etc/nginx/mime.types;
    default_type        application/octet-stream;

    # Load modular configuration files from the /etc/nginx/conf.d directory.
    # See http://nginx.org/en/docs/ngx_core_module.html#include
    # for more information.
    include /etc/nginx/conf.d/*.conf;

    server {
        listen       80;
        listen       [::]:80;
        server_name  _;
        root         /usr/share/nginx/html;

        # Load configuration files for the default server block.
        include /etc/nginx/default.d/*.conf;

        location / {
            autoindex on;
        }

        error_page 404 /404.html;
        location = /404.html {
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
        }
    }
}
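Once the image has been built with docker build (as in the push commands that follow), it can be checked locally before pushing. A minimal sketch, assuming the tag niikawa-testenv and a free host port 8080 in CloudShell; smoke_test_image is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: run the built image locally and check that nginx serves "Hello EKS!".
# Assumptions: image tag niikawa-testenv, free host port 8080.
# smoke_test_image is a hypothetical helper name.

smoke_test_image() {
  local image="${1:-niikawa-testenv}" port="${2:-8080}" cid body
  # Start the container in the background, mapping host port -> container port 80.
  cid="$(docker run -d --rm -p "${port}:80" "${image}")" || return 1
  # Retry briefly while nginx starts up.
  body="$(curl -fsS --retry 5 --retry-connrefused --retry-delay 1 "http://localhost:${port}/")"
  # --rm removes the container once it is stopped.
  docker stop "${cid}" > /dev/null
  # index.html was written by the Dockerfile's RUN echo step.
  [ "${body}" = "Hello EKS!" ]
}

# Example: smoke_test_image niikawa-testenv 8080 && echo OK
```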

- To check the commands for building and pushing the Docker image, return to the ECR console and select the ECR repository you created.

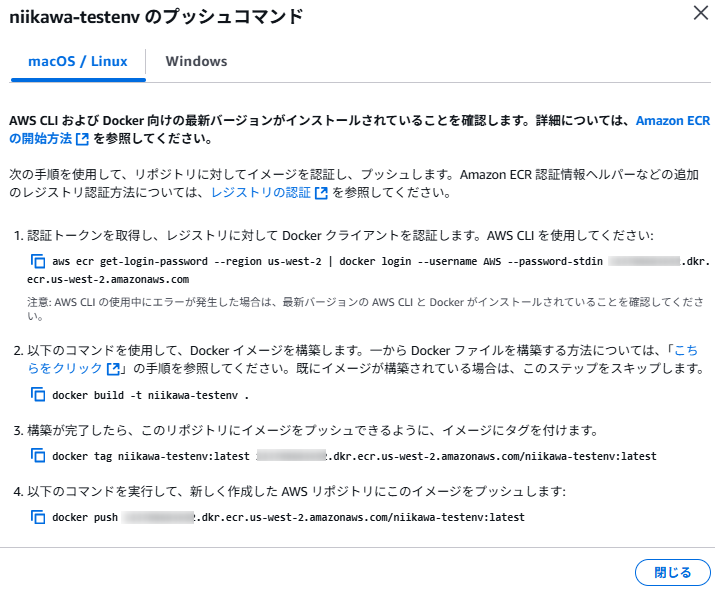

- Click the "View push commands" button. The following screen appears.

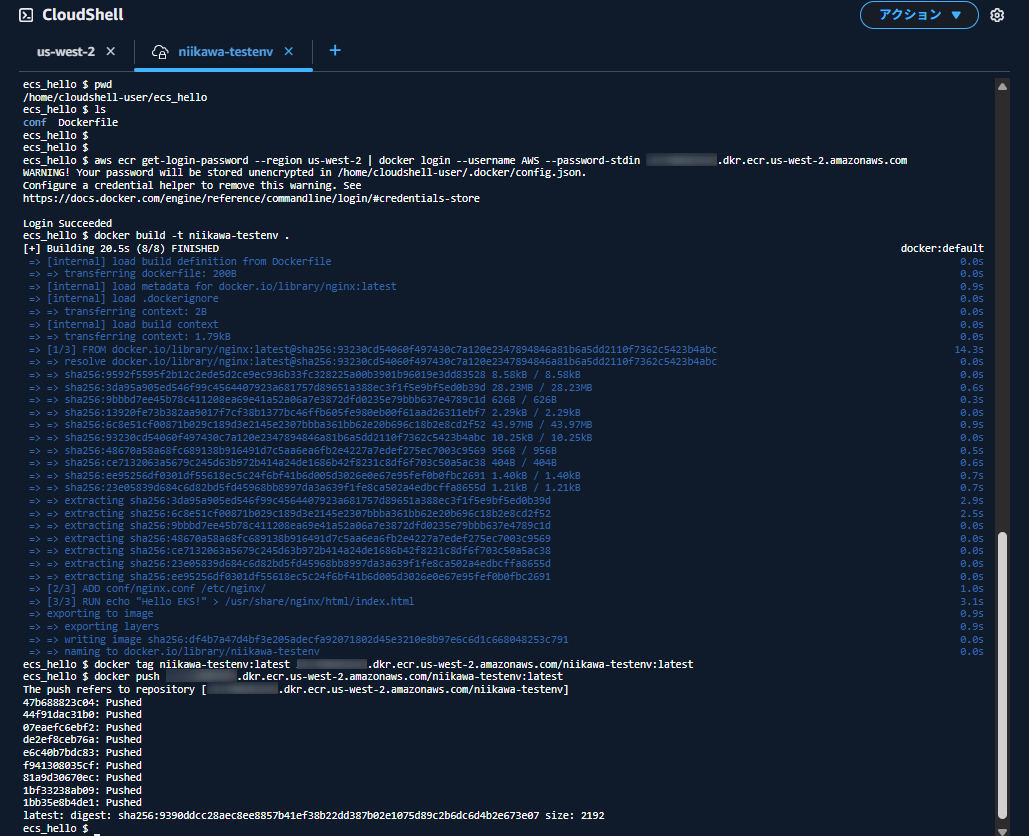

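- Return to the CloudShell terminal, build the Docker image, and push it to the ECR repository using commands 1 to 4 shown on the ECR repository screen. (In the commands below, 111111111111 stands for your AWS account ID. Change the region to match your environment.)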

ecs_hello $ aws ecr get-login-password --region us-west-2 | docker login --username AWS --password-stdin 111111111111.dkr.ecr.us-west-2.amazonaws.com

WARNING! Your password will be stored unencrypted in /home/cloudshell-user/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

ecs_hello $ docker build -t niikawa-testenv .

[+] Building 20.5s (8/8) FINISHED

=> [internal] load build definition from Dockerfile

=> => transferring dockerfile: 200B

=> [internal] load metadata for docker.io/library/nginx:latest

=> [internal] load .dockerignore

=> => transferring context: 2B

=> [internal] load build context

=> => transferring context: 1.79kB

=> [1/3] FROM docker.io/library/nginx:latest@sha256:93230cd54060f497430c7a120e2347894846a81b6a5dd2110f7362c5423b4abc

=> => resolve docker.io/library/nginx:latest@sha256:93230cd54060f497430c7a120e2347894846a81b6a5dd2110f7362c5423b4abc

=> => sha256:9592f5595f2b12c2ede5d2ce9ec936b33fc328225a00b3901b96019e3dd83528 8.58kB / 8.58kB

=> => sha256:3da95a905ed546f99c4564407923a681757d89651a388ec3f1f5e9bf5ed0b39d 28.23MB / 28.23MB

=> => sha256:9bbbd7ee45b78c411208ea69e41a52a06a7e3872dfd0235e79bbb637e4789c1d 626B / 626B

=> => sha256:13920fe73b382aa9017f7cf38b1377bc46ffb605fe980eb00f61aad26311ebf7 2.29kB / 2.29kB

=> => sha256:6c8e51cf00871b029c189d3e2145e2307bbba361bb62e20b696c18b2e8cd2f52 43.97MB / 43.97MB

=> => sha256:93230cd54060f497430c7a120e2347894846a81b6a5dd2110f7362c5423b4abc 10.25kB / 10.25kB

=> => sha256:48670a58a68fc689138b916491d7c5aa6ea6fb2e4227a7edef275ec7003c9569 956B / 956B

=> => sha256:ce7132063a5679c245d63b972b414a24de1686b42f8231c8df6f703c50a5ac38 404B / 404B

=> => sha256:ee95256df0301df55618ec5c24f6bf41b6d005d3026e0e67e95fef0b0fbc2691 1.40kB / 1.40kB

=> => sha256:23e05839d684c6d82bd5fd45968bb8997da3a639f1fe8ca502a4edbcffa8655d 1.21kB / 1.21kB

=> => extracting sha256:3da95a905ed546f99c4564407923a681757d89651a388ec3f1f5e9bf5ed0b39d

=> => extracting sha256:6c8e51cf00871b029c189d3e2145e2307bbba361bb62e20b696c18b2e8cd2f52

=> => extracting sha256:9bbbd7ee45b78c411208ea69e41a52a06a7e3872dfd0235e79bbb637e4789c1d

=> => extracting sha256:48670a58a68fc689138b916491d7c5aa6ea6fb2e4227a7edef275ec7003c9569

=> => extracting sha256:ce7132063a5679c245d63b972b414a24de1686b42f8231c8df6f703c50a5ac38

=> => extracting sha256:23e05839d684c6d82bd5fd45968bb8997da3a639f1fe8ca502a4edbcffa8655d

=> => extracting sha256:ee95256df0301df55618ec5c24f6bf41b6d005d3026e0e67e95fef0b0fbc2691

=> [2/3] ADD conf/nginx.conf /etc/nginx/

=> [3/3] RUN echo "Hello EKS!" > /usr/share/nginx/html/index.html

=> exporting to image

=> => exporting layers

=> => writing image sha256:df4b7a47d4bf3e205adecfa92071802d45e3210e8b97e6c6d1c668048253c791

=> => naming to docker.io/library/niikawa-testenv

ecs_hello $ docker tag niikawa-testenv:latest 111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest

ecs_hello $ docker push 111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest

The push refers to repository [111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv]

47b688823c04: Pushed

44f91dac31b0: Pushed

07eaefc6ebf2: Pushed

de2ef8ceb76a: Pushed

e6c40b7bdc83: Pushed

f941308035cf: Pushed

81a9d30670ec: Pushed

1bf33238ab09: Pushed

1bb35e8b4de1: Pushed

latest: digest: sha256:9390ddcc28aec8ee8857b41ef38b22dd387b02e1075d89c2b6dc6d4b2e673e07 size: 2192

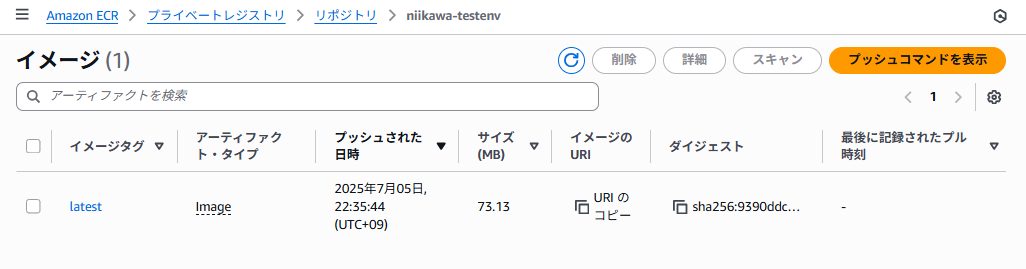

- The image has been pushed to the ECR repository. Its digest matches the one in the docker push output.
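The four push commands above hard-code the account ID, region, and repository name. A minimal sketch that parameterizes them (ecr_registry and push_to_ecr are hypothetical helper names) makes it easier to rerun the steps in another account or region; run it from the ecs_hello directory.

```shell
#!/usr/bin/env bash
# Sketch: the four "View push commands" steps, parameterized.
# ecr_registry and push_to_ecr are hypothetical helper names.

# Build the ECR registry hostname for an account and region.
ecr_registry() {
  local account_id="$1" region="$2"
  echo "${account_id}.dkr.ecr.${region}.amazonaws.com"
}

# Log in, build, tag, and push the image in the current directory.
push_to_ecr() {
  local account_id="$1" region="$2" repo="$3" registry
  registry="$(ecr_registry "${account_id}" "${region}")"
  # 1. Authenticate Docker to the registry.
  aws ecr get-login-password --region "${region}" \
    | docker login --username AWS --password-stdin "${registry}" || return 1
  # 2. Build the image from the Dockerfile in the current directory.
  docker build -t "${repo}" . || return 1
  # 3. Tag it with the full repository URI.
  docker tag "${repo}:latest" "${registry}/${repo}:latest" || return 1
  # 4. Push it to ECR.
  docker push "${registry}/${repo}:latest"
}

# Example:
#   push_to_ecr "$(aws sts get-caller-identity --query Account --output text)" us-west-2 niikawa-testenv
```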

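Hands-on 3: Install the EKS management tools in CloudShell

Installing eksctl, kubectl, and helm

- Set up eksctl, kubectl, and helm in the CloudShell environment. As the output below shows, kubectl is already preinstalled in CloudShell, so only eksctl and helm need to be installed.

- In CloudShell, files stored outside the home directory ($HOME) are deleted when the session ends. Because eksctl and helm are installed into /usr/local/bin here, reinstall them if your session ends partway through the build and you start a new one.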

~ $ which eksctl

/usr/bin/which: no eksctl in (/home/cloudshell-user/.local/bin:/home/cloudshell-user/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/lib/nodejs20/lib/node_modules/aws-cdk/bin)

~ $ curl -L "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 33.3M 100 33.3M 0 0 14.3M 0 0:00:02 0:00:02 --:--:-- 21.7M

~ $ sudo mv /tmp/eksctl /usr/local/bin

~ $ which eksctl

/usr/local/bin/eksctl

~ $ eksctl version

0.210.0

~ $ which kubectl

/usr/local/bin/kubectl

~ $

~ $ kubectl version

Client Version: v1.32.0-eks-aeac579

Kustomize Version: v5.5.0

The connection to the server localhost:8080 was refused - did you specify the right host or port?

~ $

~ $ kubectl version --client

Client Version: v1.32.0-eks-aeac579

Kustomize Version: v5.5.0

~ $ which helm

/usr/bin/which: no helm in (/home/cloudshell-user/.local/bin:/home/cloudshell-user/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/lib/nodejs20/lib/node_modules/aws-cdk/bin)

~ $ curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

~ $ chmod 700 get_helm.sh

~ $ ls -l get_helm.sh

-rwx------. 1 cloudshell-user cloudshell-user 11913 Jul 5 22:43 get_helm.sh

~ $ ./get_helm.sh

Downloading https://get.helm.sh/helm-v3.18.3-linux-amd64.tar.gz

Verifying checksum... Done.

Preparing to install helm into /usr/local/bin

helm installed into /usr/local/bin/helm

~ $ which helm

/usr/local/bin/helm

~ $ helm version

version.BuildInfo{Version:"v3.18.3", GitCommit:"6838ebcf265a3842d1433956e8a622e3290cf324", GitTreeState:"clean", GoVersion:"go1.24.4"}
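Because the eksctl and helm binaries above live outside $HOME, they are gone after a CloudShell session restarts. The sketch below (require_tools is a hypothetical helper name) checks which tools are still on PATH. As an untested alternative: since $HOME persists and ~/.local/bin is already on PATH (see the which output above), moving the binaries there instead of /usr/local/bin may avoid reinstalling them.

```shell
#!/usr/bin/env bash
# Sketch: check that the required CLI tools are on PATH.
# require_tools is a hypothetical helper name.

require_tools() {
  local tool missing=0
  for tool in "$@"; do
    if command -v "${tool}" > /dev/null 2>&1; then
      echo "ok: ${tool} -> $(command -v "${tool}")"
    else
      echo "missing: ${tool} (reinstall it as shown above)" >&2
      missing=1
    fi
  done
  # Non-zero if at least one tool was missing.
  return "${missing}"
}

# Example: require_tools eksctl kubectl helm
```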

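Hands-on 4: Create the EKS cluster

Setting environment variables for building EKS

- Set the environment variables to match the environment you use for this hands-on. In particular, replace VPC_ID and the subnet IDs with the values of the VPC stack created in Hands-on 1 (shown on the stack's Outputs tab), and make sure each subnet really is in the Availability Zone it is paired with.

- For the troubleshooting exercise later, KARPENTER_VERSION is deliberately set to "1.1.1", a version that is incompatible with K8S_VERSION 1.33. To skip the troubleshooting, change it to "1.1.5".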

export KARPENTER_NAMESPACE="karpenter"

export KARPENTER_VERSION="1.1.1"

export K8S_VERSION="1.33"

export AWS_PARTITION=$(aws sts get-caller-identity --query "Arn" | cut -d: -f2)

export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)

export AWS_DEFAULT_REGION="us-west-2"

export CLUSTER_NAME="niikawa-karpenter-demo"

export TEMPOUT="$(mktemp)"

export ALIAS_VERSION="$(aws ssm get-parameter --name "/aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2023/x86_64/standard/recommended/image_id" --query Parameter.Value | xargs aws ec2 describe-images --query 'Images[0].Name' --image-ids | sed -r 's/^.*(v[[:digit:]]+).*$/\1/')"

export VPC_ID="vpc-0bc25a2c291abb4fa"

export AWS_AVAILABILITY_ZONE_A="us-west-2a"

export AWS_AVAILABILITY_ZONE_B="us-west-2b"

export PRIVATE_SUBNET_A_ID="subnet-0f5ac1ad37caadba7"

export PRIVATE_SUBNET_B_ID="subnet-03de07e15a1f369cb"

export PUBLIC_SUBNET_A_ID="subnet-0ad846fe5448c1bbd"

export PUBLIC_SUBNET_B_ID="subnet-0ba5b930ebdac3688"
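An unset variable expands to an empty string inside the eksctl YAML heredoc later on, producing a broken cluster config without any warning. A minimal sketch (check_env is a hypothetical helper name) that fails if any listed variable is empty:

```shell
#!/usr/bin/env bash
# Sketch: fail if any of the named environment variables is unset or empty.
# check_env is a hypothetical helper name.

check_env() {
  local name value missing=0
  for name in "$@"; do
    # POSIX-compatible indirect lookup of the variable named in $name;
    # callers pass literal variable names, so eval only sees identifiers.
    eval "value=\${${name}:-}"
    if [ -z "${value}" ]; then
      echo "not set: ${name}" >&2
      missing=1
    fi
  done
  return "${missing}"
}

# Example:
#   check_env KARPENTER_NAMESPACE KARPENTER_VERSION K8S_VERSION AWS_PARTITION \
#     AWS_ACCOUNT_ID AWS_DEFAULT_REGION CLUSTER_NAME TEMPOUT ALIAS_VERSION VPC_ID \
#     AWS_AVAILABILITY_ZONE_A AWS_AVAILABILITY_ZONE_B PRIVATE_SUBNET_A_ID \
#     PRIVATE_SUBNET_B_ID PUBLIC_SUBNET_A_ID PUBLIC_SUBNET_B_ID
```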

Building EKS-related resources with CloudFormation

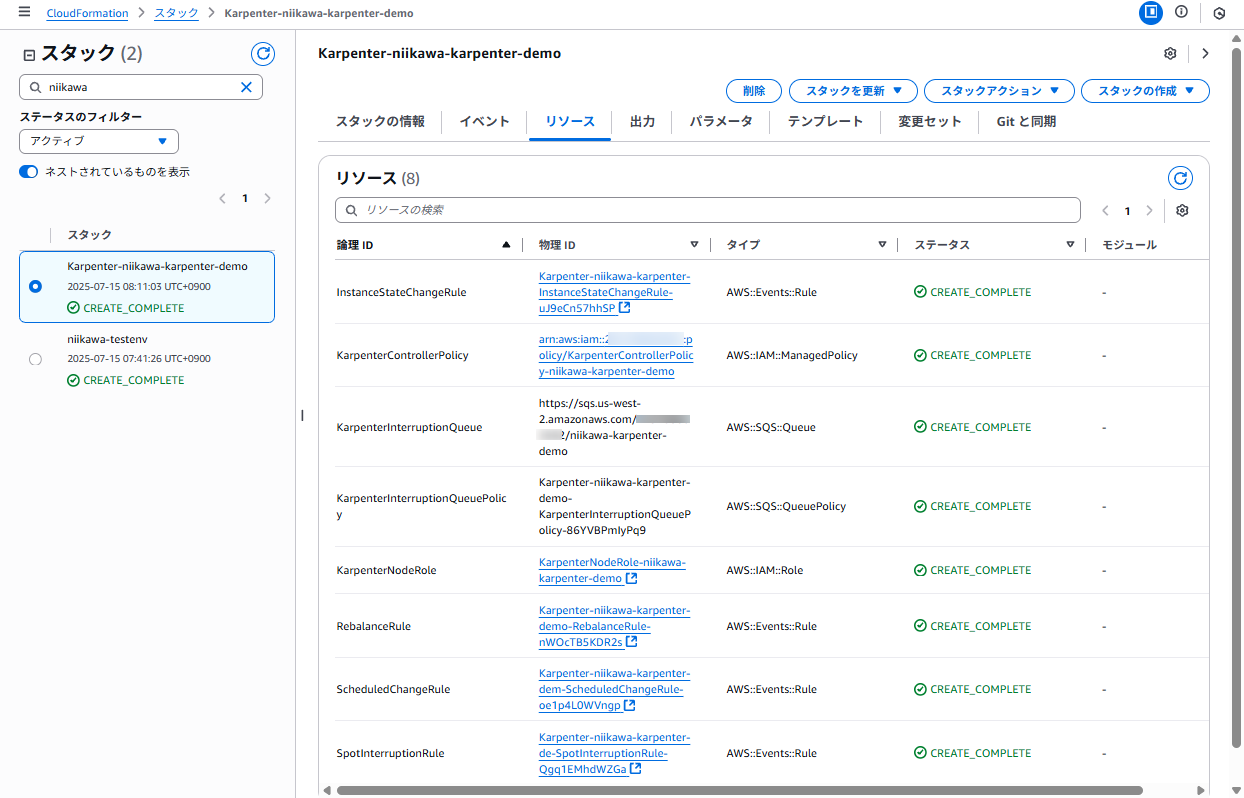

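- Following the official Karpenter guide, use CloudFormation to create the following resources.

- An IAM role for the EC2 nodes that Karpenter launches (resource name: KarpenterNodeRole)

- An IAM policy for the Karpenter controller (resource name: KarpenterControllerPolicy)

- An SQS queue for handling instance interruptions

- EventBridge rules that forward events to the SQS queue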

~ $ curl -fsSL https://raw.githubusercontent.com/aws/karpenter-provider-aws/v"${KARPENTER_VERSION}"/website/content/en/preview/getting-started/getting-started-with-karpenter/cloudformation.yaml > "${TEMPOUT}" \
> && aws cloudformation deploy \
> --stack-name "Karpenter-${CLUSTER_NAME}" \
> --template-file "${TEMPOUT}" \
> --capabilities CAPABILITY_NAMED_IAM \
> --parameter-overrides "ClusterName=${CLUSTER_NAME}"

Waiting for changeset to be created..

Waiting for stack create/update to complete

Successfully created/updated stack - Karpenter-niikawa-karpenter-demo

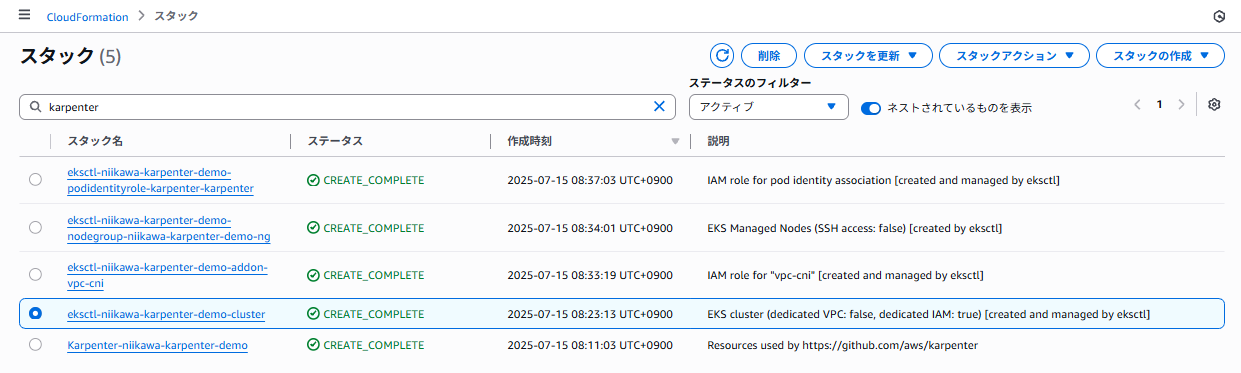

- The following is the CloudFormation console, showing the resources created by the command above.

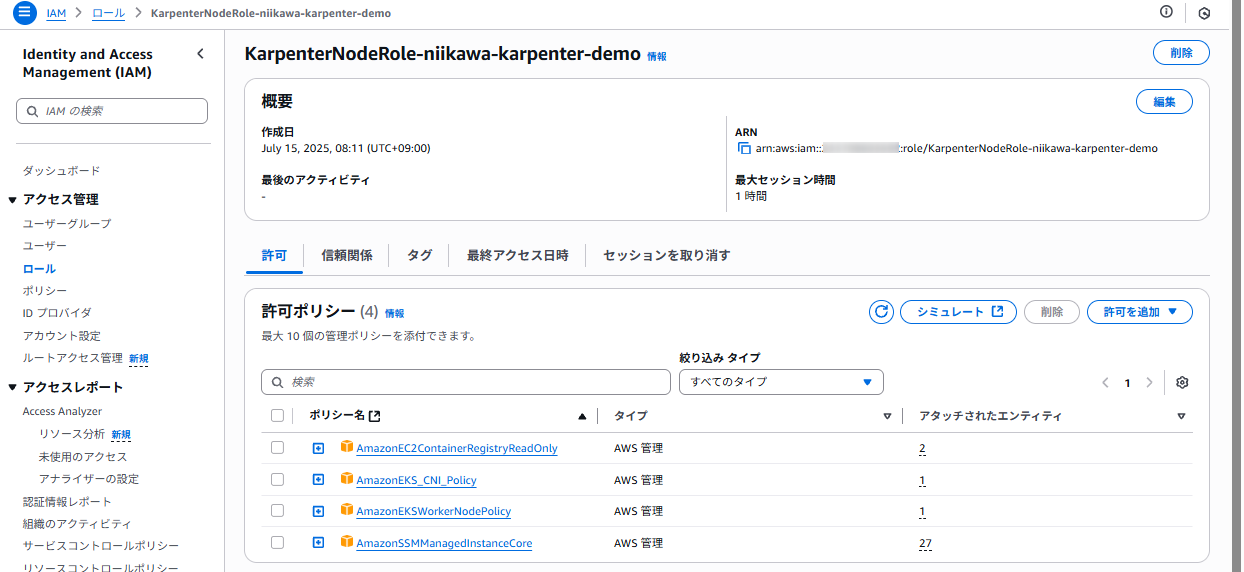

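- For IAM roles, the following resource was created (KarpenterNodeRole-[CLUSTER_NAME]).

- This is the IAM role that Karpenter attaches, through an instance profile, to the EC2 nodes it launches in response to Pod requests. Nodes use its permissions, for example, to pull images from ECR.

- The kubelet daemon running on the node uses it to authenticate to the EKS control plane and join the cluster.

- For details, see this documentation.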
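Based on the names used in this walkthrough (the node role, the controller policy, and the interruption queue that the helm command later references as settings.interruptionQueue=${CLUSTER_NAME}), the sketch below lists the expected resource names and checks the node role with the AWS CLI. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: expected Karpenter resource names for a cluster, plus an existence check.
# karpenter_resource_names and check_node_role are hypothetical helper names.

karpenter_resource_names() {
  local cluster="$1"
  echo "role   KarpenterNodeRole-${cluster}"
  echo "policy KarpenterControllerPolicy-${cluster}"
  echo "queue  ${cluster}"
}

# Print the ARN of the node role; fails if the role does not exist.
check_node_role() {
  aws iam get-role --role-name "KarpenterNodeRole-$1" \
    --query 'Role.Arn' --output text
}

# Example:
#   karpenter_resource_names "${CLUSTER_NAME}"
#   check_node_role "${CLUSTER_NAME}"
#   aws sqs get-queue-url --queue-name "${CLUSTER_NAME}"
```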

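Creating the EKS cluster with eksctl create

- Prepare the YAML based on the template in the official Karpenter guide.

- This hands-on uses the following template.

- The difference from the official YAML is that, instead of creating a new VPC, it places the resources in the existing VPC and subnets.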

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: ${CLUSTER_NAME}
  region: ${AWS_DEFAULT_REGION}
  version: "${K8S_VERSION}"
  tags:
    karpenter.sh/discovery: ${CLUSTER_NAME}

vpc:
  id: ${VPC_ID}
  subnets:
    private:
      ${AWS_AVAILABILITY_ZONE_A}: { id: ${PRIVATE_SUBNET_A_ID} }
      ${AWS_AVAILABILITY_ZONE_B}: { id: ${PRIVATE_SUBNET_B_ID} }
    public:
      ${AWS_AVAILABILITY_ZONE_A}: { id: ${PUBLIC_SUBNET_A_ID} }
      ${AWS_AVAILABILITY_ZONE_B}: { id: ${PUBLIC_SUBNET_B_ID} }
  clusterEndpoints:
    publicAccess: true
    privateAccess: false

iam:
  withOIDC: true
  podIdentityAssociations:
  - namespace: "${KARPENTER_NAMESPACE}"
    serviceAccountName: karpenter
    roleName: ${CLUSTER_NAME}-karpenter
    permissionPolicyARNs:
    - arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:policy/KarpenterControllerPolicy-${CLUSTER_NAME}

iamIdentityMappings:
- arn: "arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}"
  username: system:node:{{EC2PrivateDNSName}}
  groups:
  - system:bootstrappers
  - system:nodes

managedNodeGroups:
- name: ${CLUSTER_NAME}-ng
  instanceType: m5.large
  amiFamily: AmazonLinux2023
  desiredCapacity: 2
  minSize: 1
  maxSize: 5
  privateNetworking: true
  subnets:
    - ${PRIVATE_SUBNET_A_ID}
    - ${PRIVATE_SUBNET_B_ID}

addons:
- name: eks-pod-identity-agent

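- Use the cat command to create the YAML from the template above. As in the operation below, paste the template after the cat command, then type "EOF" on its own line. Because the heredoc delimiter EOF is unquoted, the shell substitutes the ${...} environment variables while writing the file.

- As a precaution, use the head command to confirm that the environment variables were substituted correctly in the YAML.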

~ $ cat <<EOF > karpenter-cluster.yaml
> apiVersion: eksctl.io/v1alpha5
> kind: ClusterConfig
>
> metadata:
>   name: ${CLUSTER_NAME}
>   region: ${AWS_DEFAULT_REGION}
>   version: "${K8S_VERSION}"
>   tags:
>     karpenter.sh/discovery: ${CLUSTER_NAME}
>
> vpc:
>   id: ${VPC_ID}
>   subnets:
>     private:
>       ${AWS_AVAILABILITY_ZONE_A}: { id: ${PRIVATE_SUBNET_A_ID} }
>       ${AWS_AVAILABILITY_ZONE_B}: { id: ${PRIVATE_SUBNET_B_ID} }
>     public:
>       ${AWS_AVAILABILITY_ZONE_A}: { id: ${PUBLIC_SUBNET_A_ID} }
>       ${AWS_AVAILABILITY_ZONE_B}: { id: ${PUBLIC_SUBNET_B_ID} }
>   clusterEndpoints:
>     publicAccess: true
>     privateAccess: false
>
> iam:
>   withOIDC: true
>   podIdentityAssociations:
>   - namespace: "${KARPENTER_NAMESPACE}"
>     serviceAccountName: karpenter
>     roleName: ${CLUSTER_NAME}-karpenter
>     permissionPolicyARNs:
>     - arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:policy/KarpenterControllerPolicy-${CLUSTER_NAME}
>
> iamIdentityMappings:
> - arn: "arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}"
>   username: system:node:{{EC2PrivateDNSName}}
>   groups:
>   - system:bootstrappers
>   - system:nodes
>
> managedNodeGroups:
> - name: ${CLUSTER_NAME}-ng
>   instanceType: m5.large
>   amiFamily: AmazonLinux2023
>   desiredCapacity: 2
>   minSize: 1
>   maxSize: 5
>   privateNetworking: true
>   subnets:
>     - ${PRIVATE_SUBNET_A_ID}
>     - ${PRIVATE_SUBNET_B_ID}
>
> addons:
> - name: eks-pod-identity-agent
> EOF

~ $

~ $ head karpenter-cluster.yaml

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: niikawa-karpenter-demo
  region: us-west-2
  version: "1.33"
  tags:
    karpenter.sh/discovery: niikawa-karpenter-demo

~ $

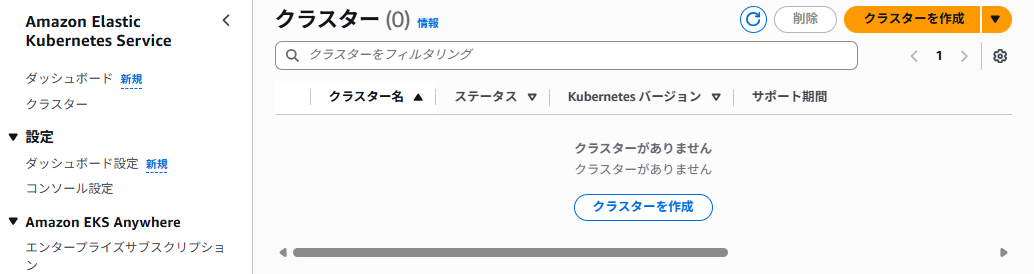

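- At this point, the EKS cluster has not been created yet.

- Now run the eksctl create command.

- The command below created the EKS cluster successfully. Initially, however, cluster creation failed because I had created several VPC endpoints beforehand.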

~ $ eksctl create cluster -f karpenter-cluster.yaml

2025-07-14 23:23:13 [ℹ] eksctl version 0.210.0

2025-07-14 23:23:13 [ℹ] using region us-west-2

2025-07-14 23:23:13 [✔] using existing VPC (vpc-0bc25a2c291abb4fa) and subnets (private:map[us-west-2a:{subnet-0f5ac1ad37caadba7 us-west-2a 10.192.20.0/24 0 } us-west-2b:{subnet-03de07e15a1f369cb us-west-2b 10.192.21.0/24 0 }] public:map[us-west-2a:{subnet-0ad846fe5448c1bbd us-west-2a 10.192.10.0/24 0 } us-west-2b:{subnet-0ba5b930ebdac3688 us-west-2b 10.192.11.0/24 0 }])

2025-07-14 23:23:13 [!] custom VPC/subnets will be used; if resulting cluster doesn't function as expected, make sure to review the configuration of VPC/subnets

2025-07-14 23:23:13 [ℹ] nodegroup "niikawa-karpenter-demo-ng" will use "" [AmazonLinux2023/1.33]

2025-07-14 23:23:13 [ℹ] using Kubernetes version 1.33

2025-07-14 23:23:13 [ℹ] creating EKS cluster "niikawa-karpenter-demo" in "us-west-2" region with managed nodes

2025-07-14 23:23:13 [ℹ] 1 nodegroup (niikawa-karpenter-demo-ng) was included (based on the include/exclude rules)

2025-07-14 23:23:13 [ℹ] will create a CloudFormation stack for cluster itself and 1 managed nodegroup stack(s)

2025-07-14 23:23:13 [ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=us-west-2 --cluster=niikawa-karpenter-demo'

2025-07-14 23:23:13 [ℹ] Kubernetes API endpoint access will use default of {publicAccess=true, privateAccess=false} for cluster "niikawa-karpenter-demo" in "us-west-2"

2025-07-14 23:23:13 [ℹ] CloudWatch logging will not be enabled for cluster "niikawa-karpenter-demo" in "us-west-2"

2025-07-14 23:23:13 [ℹ] you can enable it with 'eksctl utils update-cluster-logging --enable-types={SPECIFY-YOUR-LOG-TYPES-HERE (e.g. all)} --region=us-west-2 --cluster=niikawa-karpenter-demo'

2025-07-14 23:23:13 [ℹ] default addons metrics-server, vpc-cni, kube-proxy, coredns were not specified, will install them as EKS addons

2025-07-14 23:23:13 [ℹ]

2 sequential tasks: { create cluster control plane "niikawa-karpenter-demo",

2 sequential sub-tasks: {

6 sequential sub-tasks: {

1 task: { create addons },

wait for control plane to become ready,

associate IAM OIDC provider,

no tasks,

update VPC CNI to use IRSA if required,

create IAM identity mappings,

},

create managed nodegroup "niikawa-karpenter-demo-ng",

}

}

2025-07-14 23:23:13 [ℹ] building cluster stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:23:14 [ℹ] deploying stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:23:44 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:24:14 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:25:14 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:26:14 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:27:14 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:28:14 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:29:14 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:30:14 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:31:14 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-cluster"

2025-07-14 23:31:15 [ℹ] creating addon: eks-pod-identity-agent

2025-07-14 23:31:16 [ℹ] successfully created addon: eks-pod-identity-agent

2025-07-14 23:31:16 [ℹ] creating addon: metrics-server

2025-07-14 23:31:16 [ℹ] successfully created addon: metrics-server

2025-07-14 23:31:17 [!] recommended policies were found for "vpc-cni" addon, but since OIDC is disabled on the cluster, eksctl cannot configure the requested permissions; the recommended way to provide IAM permissions for "vpc-cni" addon is via pod identity associations; after addon creation is completed, add all recommended policies to the config file, under `addon.PodIdentityAssociations`, and run `eksctl update addon`

2025-07-14 23:31:17 [ℹ] creating addon: vpc-cni

2025-07-14 23:31:17 [ℹ] successfully created addon: vpc-cni

2025-07-14 23:31:17 [ℹ] creating addon: kube-proxy

2025-07-14 23:31:17 [ℹ] successfully created addon: kube-proxy

2025-07-14 23:31:18 [ℹ] creating addon: coredns

2025-07-14 23:31:18 [ℹ] successfully created addon: coredns

2025-07-14 23:33:19 [ℹ] addon "vpc-cni" active

2025-07-14 23:33:20 [ℹ] deploying stack "eksctl-niikawa-karpenter-demo-addon-vpc-cni"

2025-07-14 23:33:20 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-addon-vpc-cni"

2025-07-14 23:33:50 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-addon-vpc-cni"

2025-07-14 23:33:50 [ℹ] updating addon

2025-07-14 23:34:00 [ℹ] addon "vpc-cni" active

2025-07-14 23:34:00 [ℹ] checking arn arn:aws:iam::111111111111:role/KarpenterNodeRole-niikawa-karpenter-demo against entries in the auth ConfigMap

2025-07-14 23:34:00 [ℹ] adding identity "arn:aws:iam::111111111111:role/KarpenterNodeRole-niikawa-karpenter-demo" to auth ConfigMap

2025-07-14 23:34:01 [ℹ] building managed nodegroup stack "eksctl-niikawa-karpenter-demo-nodegroup-niikawa-karpenter-demo-ng"

2025-07-14 23:34:01 [ℹ] deploying stack "eksctl-niikawa-karpenter-demo-nodegroup-niikawa-karpenter-demo-ng"

2025-07-14 23:34:01 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-nodegroup-niikawa-karpenter-demo-ng"

2025-07-14 23:34:31 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-nodegroup-niikawa-karpenter-demo-ng"

2025-07-14 23:35:12 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-nodegroup-niikawa-karpenter-demo-ng"

2025-07-14 23:37:02 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-nodegroup-niikawa-karpenter-demo-ng"

2025-07-14 23:37:02 [ℹ] waiting for the control plane to become ready

2025-07-14 23:37:03 [✔] saved kubeconfig as "/home/cloudshell-user/.kube/config"

2025-07-14 23:37:03 [ℹ] no tasks

2025-07-14 23:37:03 [✔] all EKS cluster resources for "niikawa-karpenter-demo" have been created

2025-07-14 23:37:03 [ℹ] nodegroup "niikawa-karpenter-demo-ng" has 2 node(s)

2025-07-14 23:37:03 [ℹ] node "ip-10-192-20-142.us-west-2.compute.internal" is ready

2025-07-14 23:37:03 [ℹ] node "ip-10-192-21-220.us-west-2.compute.internal" is ready

2025-07-14 23:37:03 [ℹ] waiting for at least 1 node(s) to become ready in "niikawa-karpenter-demo-ng"

2025-07-14 23:37:03 [ℹ] nodegroup "niikawa-karpenter-demo-ng" has 2 node(s)

2025-07-14 23:37:03 [ℹ] node "ip-10-192-20-142.us-west-2.compute.internal" is ready

2025-07-14 23:37:03 [ℹ] node "ip-10-192-21-220.us-west-2.compute.internal" is ready

2025-07-14 23:37:03 [✔] created 1 managed nodegroup(s) in cluster "niikawa-karpenter-demo"

2025-07-14 23:37:03 [ℹ] 1 task: {

2 sequential sub-tasks: {

create IAM role for pod identity association for service account "karpenter/karpenter",

create pod identity association for service account "karpenter/karpenter",

} }2025-07-14 23:37:03 [ℹ] deploying stack "eksctl-niikawa-karpenter-demo-podidentityrole-karpenter-karpenter"

2025-07-14 23:37:03 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-podidentityrole-karpenter-karpenter"

2025-07-14 23:37:33 [ℹ] waiting for CloudFormation stack "eksctl-niikawa-karpenter-demo-podidentityrole-karpenter-karpenter"

2025-07-14 23:37:34 [ℹ] created pod identity association for service account "karpenter" in namespace "karpenter"

2025-07-14 23:37:34 [ℹ] all tasks were completed successfully

2025-07-14 23:37:35 [ℹ] kubectl command should work with "/home/cloudshell-user/.kube/config", try 'kubectl get nodes'

2025-07-14 23:37:35 [✔] EKS cluster "niikawa-karpenter-demo" in "us-west-2" region is ready

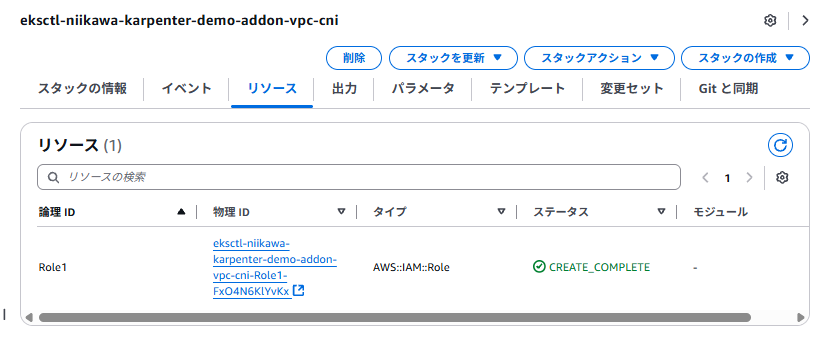

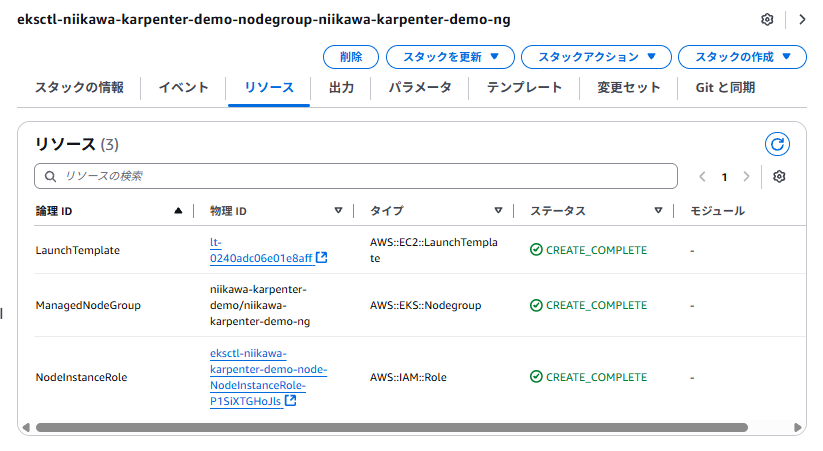

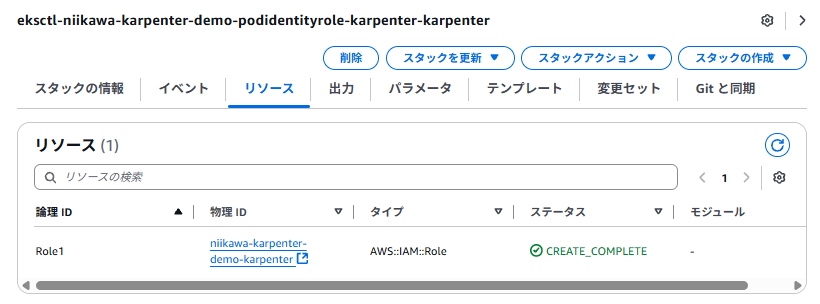

- The following is the CloudFormation console. You can see that four new stacks have been created.

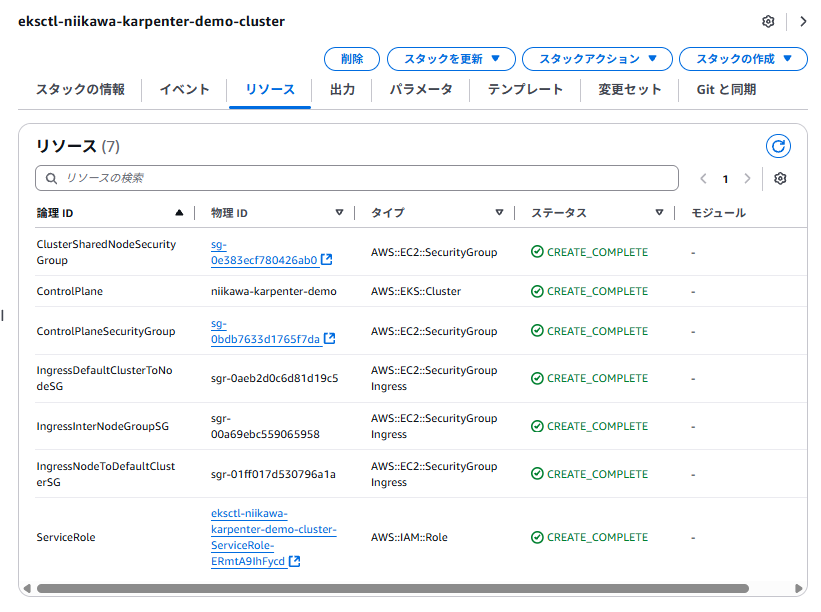

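- The resources created by each stack are as follows.

- The following stack creates this IAM role (eksctl-[CLUSTER_NAME]-node-NodeInstanceRole-xxxxxxxxxxxx).

- It is the IAM role used by the EC2 nodes in the initial node group created by the eksctl create cluster command.

- This IAM role is used in an IAM access entry. The kubelet running on these EC2 nodes uses it when it asks the EKS control plane to let the node join the cluster.

- It has policies attached for pulling container images from ECR, plus the basic policies a node needs to function as an EKS worker node.

- The following stack creates this IAM role ([CLUSTER_NAME]-karpenter).

- This IAM role is used with Pod Identity. The Kubernetes scheduler places the Karpenter controller Pod on a node. This Pod has a very powerful job: it creates and deletes EC2 nodes by itself according to the resource demands of other Pods.

- Through the Pod Identity association, EKS provides this role only to the Karpenter controller Pod (the karpenter service account in the karpenter namespace).

- The following is the EKS console after running eksctl create. Confirm that the EKS cluster and node group have been created.

- Use the following eksctl and kubectl commands to confirm that the EKS cluster and its nodes have been created.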

```shell
~ $ eksctl get cluster
NAME REGION EKSCTL CREATED
niikawa-karpenter-demo us-west-2 True
~ $ kubectl cluster-info
Kubernetes control plane is running at https://694AE41B50174BD885DD8EAA01E91626.gr7.us-west-2.eks.amazonaws.com
CoreDNS is running at https://694AE41B50174BD885DD8EAA01E91626.gr7.us-west-2.eks.amazonaws.com/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
~ $ kubectl get nodes
NAME STATUS ROLES AGE VERSION
ip-10-192-20-142.us-west-2.compute.internal Ready <none> 11m v1.33.0-eks-802817d
ip-10-192-21-220.us-west-2.compute.internal Ready <none> 11m v1.33.0-eks-802817d
```
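The Pod Identity association that eksctl created for the Karpenter controller can also be checked from the AWS CLI. The sketch below assumes AWS CLI v2 with EKS Pod Identity support and an exported CLUSTER_NAME; the function name is hypothetical.

```shell
# Hypothetical helper: list Pod Identity associations for the Karpenter service account.
# Assumes AWS CLI v2 and that CLUSTER_NAME is already exported.
show_karpenter_pod_identity() {
  ns="${1:-karpenter}"
  sa="${2:-karpenter}"
  aws eks list-pod-identity-associations \
    --cluster-name "${CLUSTER_NAME}" \
    --namespace "${ns}" \
    --service-account "${sa}" \
    --query 'associations[].[namespace,serviceAccount,associationId]' \
    --output text
}
```

An empty result would suggest that the association for `karpenter/karpenter` was not created. In that case the controller Pod would have no AWS credentials for launching EC2 instances.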

- Next, following the Karpenter getting-started guide, set the following environment variables.

```shell
export CLUSTER_ENDPOINT="$(aws eks describe-cluster --name "${CLUSTER_NAME}" --query "cluster.endpoint" --output text)"
export KARPENTER_IAM_ROLE_ARN="arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/${CLUSTER_NAME}-karpenter"
echo "${CLUSTER_ENDPOINT} ${KARPENTER_IAM_ROLE_ARN}"
```
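The helm command and the YAML templates in the following steps depend on these variables, as well as on KARPENTER_VERSION, KARPENTER_NAMESPACE and ALIAS_VERSION, which are also referenced below. If one of them is empty, the result is a silently broken value. A small guard can catch this early. The sketch below is a hypothetical POSIX sh helper and is not part of the official guide.

```shell
# Hypothetical guard: report every listed variable that is unset or empty.
# Returns 0 only if all of them have a value.
require_env() {
  missing=0
  for name in "$@"; do
    # Indirect lookup in POSIX sh: assigns the value of the variable named by $name
    eval "value=\${${name}:-}"
    if [ -z "${value}" ]; then
      echo "missing environment variable: ${name}" >&2
      missing=1
    fi
  done
  return "${missing}"
}
```

Example: `require_env CLUSTER_NAME CLUSTER_ENDPOINT KARPENTER_IAM_ROLE_ARN KARPENTER_VERSION KARPENTER_NAMESPACE ALIAS_VERSION && echo ok`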

Hands-on 5: Installing Karpenter

Installing Karpenter

- From here, install the Karpenter autoscaler.

- Run the following helm command.

```shell
helm upgrade --install karpenter oci://public.ecr.aws/karpenter/karpenter --version "${KARPENTER_VERSION}" --namespace "${KARPENTER_NAMESPACE}" --create-namespace \
  --set "settings.clusterName=${CLUSTER_NAME}" \
  --set "settings.interruptionQueue=${CLUSTER_NAME}" \
  --set controller.resources.requests.cpu=1 \
  --set controller.resources.requests.memory=1Gi \
  --set controller.resources.limits.cpu=1 \
  --set controller.resources.limits.memory=1Gi \
  --wait
```

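Troubleshooting ①

- This part is a troubleshooting walkthrough.

- As the log below shows, the helm command failed with "Error: context deadline exceeded". This means helm's --wait gave up waiting for the Karpenter Pods to become ready.

- Investigate with the kubectl get all and kubectl logs commands.

- The Pods are in the CrashLoopBackOff status and keep restarting.

- The logs show "panic: validating kubernetes version, karpenter version is not compatible with K8s version 1.33".

- The cause was that the Karpenter version (KARPENTER_VERSION, 1.1.1 at this point) was not compatible with the Kubernetes version (K8S_VERSION, 1.33). Change the environment variable to KARPENTER_VERSION="1.1.5" and retry.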

```shell
~ $ helm upgrade --install karpenter oci://public.ecr.aws/karpenter/karpenter --version "${KARPENTER_VERSION}" --namespace "${KARPENTER_NAMESPACE}" --create-namespace \
> --set "settings.clusterName=${CLUSTER_NAME}" \
> --set "settings.interruptionQueue=${CLUSTER_NAME}" \
> --set controller.resources.requests.cpu=1 \
> --set controller.resources.requests.memory=1Gi \
> --set controller.resources.limits.cpu=1 \
> --set controller.resources.limits.memory=1Gi \
> --wait
Release "karpenter" does not exist. Installing it now.
Pulled: public.ecr.aws/karpenter/karpenter:1.1.1
Digest: sha256:b42c6d224e7b19eafb65e2d440734027a8282145569d4d142baf10ba495e90d0
Error: context deadline exceeded
~ $ kubectl get namespace
NAME STATUS AGE
default Active 59m
karpenter Active 35m
kube-node-lease Active 59m
kube-public Active 59m
kube-system Active 59m
~ $ kubectl get all -n karpenter
NAME READY STATUS RESTARTS AGE
pod/karpenter-9bd684877-2bjnw 0/1 CrashLoopBackOff 11 (3m46s ago) 35m
pod/karpenter-9bd684877-pkzqv 0/1 CrashLoopBackOff 11 (4m1s ago) 35m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/karpenter ClusterIP 172.20.42.85 <none> 8080/TCP 35m

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/karpenter 0/2 2 0 35m

NAME DESIRED CURRENT READY AGE
replicaset.apps/karpenter-9bd684877 2 2 0 35m
~ $ kubectl logs -n karpenter karpenter-9bd684877-2bjnw
panic: validating kubernetes version, karpenter version is not compatible with K8s version 1.33

goroutine 1 [running]:
github.com/samber/lo.must({0x38c81e0, 0xc0008c8560}, {0x0, 0x0, 0x0})
github.com/samber/lo@v1.47.0/errors.go:53 +0x1df
github.com/samber/lo.Must0(...)
github.com/samber/lo@v1.47.0/errors.go:72
github.com/aws/karpenter-provider-aws/pkg/operator.NewOperator({0x42d15e0, 0xc0006f3aa0}, 0xc000532880)
github.com/aws/karpenter-provider-aws/pkg/operator/operator.go:155 +0xf7c
main.main()
github.com/aws/karpenter-provider-aws/cmd/controller/main.go:28 +0x2a
~ $ kubectl logs -n karpenter karpenter-9bd684877-pkzqv
panic: validating kubernetes version, karpenter version is not compatible with K8s version 1.33

goroutine 1 [running]:
github.com/samber/lo.must({0x38c81e0, 0xc000ad6160}, {0x0, 0x0, 0x0})
github.com/samber/lo@v1.47.0/errors.go:53 +0x1df
github.com/samber/lo.Must0(...)
github.com/samber/lo@v1.47.0/errors.go:72
github.com/aws/karpenter-provider-aws/pkg/operator.NewOperator({0x42d15e0, 0xc0004a6600}, 0xc00054f540)
github.com/aws/karpenter-provider-aws/pkg/operator/operator.go:155 +0xf7c
main.main()
github.com/aws/karpenter-provider-aws/cmd/controller/main.go:28 +0x2a
```
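When helm reports only "context deadline exceeded", the actual cause appears in the controller logs, as shown above. The sketch below extracts the rejected Kubernetes version from such log text so that it can be compared with the Karpenter compatibility matrix. It matches the exact panic wording shown above, so it assumes that other Karpenter releases phrase the message the same way. The function name is hypothetical.

```shell
# Hypothetical helper: read Karpenter controller logs on stdin and print the Kubernetes
# version named in the "not compatible" panic (prints nothing if the panic is absent).
k8s_version_from_karpenter_panic() {
  sed -n 's/.*karpenter version is not compatible with K8s version \([0-9][0-9.]*\).*/\1/p' |
    head -n 1
}
```

Example: `kubectl logs -n "${KARPENTER_NAMESPACE}" deployment/karpenter | k8s_version_from_karpenter_panic`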

- Below is the result of the retry.

- This time the helm command succeeds, and the Pods reach the Running status.

```shell
~ $ echo $KARPENTER_VERSION
1.1.1
~ $ export KARPENTER_VERSION="1.1.5"
~ $ echo $KARPENTER_VERSION
1.1.5
~ $ helm upgrade --install karpenter oci://public.ecr.aws/karpenter/karpenter --version "${KARPENTER_VERSION}" --namespace "${KARPENTER_NAMESPACE}" --create-namespace \
> --set "settings.clusterName=${CLUSTER_NAME}" \
> --set "settings.interruptionQueue=${CLUSTER_NAME}" \
> --set controller.resources.requests.cpu=1 \
> --set controller.resources.requests.memory=1Gi \
> --set controller.resources.limits.cpu=1 \
> --set controller.resources.limits.memory=1Gi \
> --wait
Pulled: public.ecr.aws/karpenter/karpenter:1.1.5
Digest: sha256:0fad8d11f9849eb887b301945975ff8214e4aebb06d9cdc5c6ad8b3a271c2273
Release "karpenter" has been upgraded. Happy Helming!
NAME: karpenter
LAST DEPLOYED: Tue Jul 15 00:32:27 2025
NAMESPACE: karpenter
STATUS: deployed
REVISION: 2
TEST SUITE: None
~ $ kubectl get namespace
NAME STATUS AGE
default Active 64m
karpenter Active 40m
kube-node-lease Active 64m
kube-public Active 64m
kube-system Active 64m
~ $ kubectl get all -n karpenter
NAME READY STATUS RESTARTS AGE
pod/karpenter-6f98ccbdbc-2g9kh 1/1 Running 0 41s
pod/karpenter-6f98ccbdbc-jm8tq 1/1 Running 0 41s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/karpenter ClusterIP 172.20.42.85 <none> 8080/TCP 40m

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/karpenter 2/2 2 2 40m

NAME DESIRED CURRENT READY AGE
replicaset.apps/karpenter-6f98ccbdbc 2 2 2 41s
replicaset.apps/karpenter-9bd684877 0 0 0 40m
```
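Because `helm --wait` reports only a timeout, it can help to wait on the Deployment rollout directly and print the controller logs if it does not become ready. This is a minimal sketch. The function name and the 180-second timeout are arbitrary choices, not values from the Karpenter guide.

```shell
# Hypothetical helper: wait for the Karpenter Deployment and show diagnostics on failure.
wait_for_karpenter() {
  ns="${1:-karpenter}"
  if kubectl rollout status deployment/karpenter -n "${ns}" --timeout=180s; then
    return 0
  fi
  # Rollout did not complete: show Pod states and the most recent controller log lines
  kubectl get pods -n "${ns}"
  kubectl logs -n "${ns}" deployment/karpenter --tail=20
  return 1
}
```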

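Creating the Karpenter NodePool

- Karpenter is not yet ready to provision nodes. Next, create a Karpenter NodePool together with the EC2NodeClass it references.

- Prepare the YAML based on the template on the Karpenter official site.

- This hands-on uses the following template.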

```yaml
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: default
spec:
  template:
    spec:
      requirements:
        - key: kubernetes.io/arch
          operator: In
          values: ["amd64"]
        - key: kubernetes.io/os
          operator: In
          values: ["linux"]
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["on-demand"]
        - key: karpenter.k8s.aws/instance-category
          operator: In
          values: ["c", "m", "r"]
        - key: karpenter.k8s.aws/instance-generation
          operator: Gt
          values: ["2"]
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: default
      expireAfter: 720h # 30 * 24h = 720h
  limits:
    cpu: 1000
  disruption:
    consolidationPolicy: WhenEmptyOrUnderutilized
    consolidateAfter: 1m
---
apiVersion: karpenter.k8s.aws/v1
kind: EC2NodeClass
metadata:
  name: default
spec:
  role: "KarpenterNodeRole-${CLUSTER_NAME}" # replace with your cluster name
  amiSelectorTerms:
    - alias: "al2023@${ALIAS_VERSION}"
  subnetSelectorTerms:
    - tags:
        karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name
  securityGroupSelectorTerms:
    - tags:
        karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name
```

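- Create the YAML file from the template above with cat. As in the session below, type the cat command, paste the template, and then type "EOF". Because the EOF delimiter is unquoted, the shell expands ${CLUSTER_NAME} and ${ALIAS_VERSION} while writing the file.

- As a quick check, use head to confirm that the file was written. Note that head prints only the first 10 lines, which do not include the substituted environment variables.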

```shell
~ $ cat <<EOF > karpenter-rules.yaml
> apiVersion: karpenter.sh/v1
> kind: NodePool
> metadata:
>   name: default
> spec:
>   template:
>     spec:
>       requirements:
>         - key: kubernetes.io/arch
>           operator: In
>           values: ["amd64"]
>         - key: kubernetes.io/os
>           operator: In
>           values: ["linux"]
>         - key: karpenter.sh/capacity-type
>           operator: In
>           values: ["on-demand"]
>         - key: karpenter.k8s.aws/instance-category
>           operator: In
>           values: ["c", "m", "r"]
>         - key: karpenter.k8s.aws/instance-generation
>           operator: Gt
>           values: ["2"]
>       nodeClassRef:
>         group: karpenter.k8s.aws
>         kind: EC2NodeClass
>         name: default
>       expireAfter: 720h # 30 * 24h = 720h
>   limits:
>     cpu: 1000
>   disruption:
>     consolidationPolicy: WhenEmptyOrUnderutilized
>     consolidateAfter: 1m
> ---
> apiVersion: karpenter.k8s.aws/v1
> kind: EC2NodeClass
> metadata:
>   name: default
> spec:
>   role: "KarpenterNodeRole-${CLUSTER_NAME}" # replace with your cluster name
>   amiSelectorTerms:
>     - alias: "al2023@${ALIAS_VERSION}"
>   subnetSelectorTerms:
>     - tags:
>         karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name
>   securityGroupSelectorTerms:
>     - tags:
>         karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name
> EOF
~ $
~ $ head karpenter-rules.yaml
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: default
spec:
  template:
    spec:
      requirements:
        - key: kubernetes.io/arch
          operator: In
~ $
```
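To confirm that the substitutions actually happened, check the whole file rather than only its first lines. The hypothetical helper below flags two problems. The first is a leftover literal `${...}`, which appears if the heredoc delimiter is quoted, as in `<<'EOF'`. The second is an empty quoted value such as `""` or `"al2023@"`, which appears when a variable such as CLUSTER_NAME or ALIAS_VERSION is unset.

```shell
# Hypothetical check for a manifest rendered through an unquoted heredoc.
# Prints offending lines and returns 1 if any substitution looks wrong.
check_rendered_manifest() {
  file="$1"
  status=0
  # A literal "${" means the variable was not expanded at all
  if grep -n '\${' "${file}"; then
    status=1
  fi
  # An empty variable leaves "" or a dangling "al2023@" in the file
  if grep -nE '""|@"' "${file}"; then
    status=1
  fi
  return "${status}"
}
```

Example: `check_rendered_manifest karpenter-rules.yaml && echo "substitutions look fine"`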

- Now run kubectl apply.

```shell
~ $ kubectl apply -f karpenter-rules.yaml
nodepool.karpenter.sh/default created
ec2nodeclass.karpenter.k8s.aws/default created
```
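Applying the manifests only proves that the API server accepted them. Karpenter still has to resolve the subnets, security groups, AMI and instance profile that the EC2NodeClass refers to. The sketch below waits for both objects to report a `Ready` condition right after `kubectl apply`. It assumes that the Karpenter v1 CRDs expose that condition. The function name and the 60-second timeout are hypothetical.

```shell
# Hypothetical helper: wait until the EC2NodeClass and NodePool named $1 report Ready.
wait_for_nodepool_ready() {
  name="${1:-default}"
  kubectl wait --for=condition=Ready "ec2nodeclass/${name}" --timeout=60s &&
    kubectl wait --for=condition=Ready "nodepool/${name}" --timeout=60s
}
```

If it times out, `kubectl describe ec2nodeclass default` shows the status conditions. That output should reveal which reference (for example, the subnets) Karpenter could not resolve.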

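Deploying the application

- Now it is finally time to deploy the application.

- Prepare the YAML based on the template on the Karpenter official site.

- This hands-on uses the following template. Note that the names and labels have been changed for this hands-on (for example, niikawa-testenv).

- The container's image field specifies the URI of the image pushed to ECR.

- To reproduce the troubleshooting described later, uncomment the commented-out securityContext section (remove the #) in the template below. If you want to skip the troubleshooting, use the template as is.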

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: niikawa-testenv
spec:
  replicas: 0
  selector:
    matchLabels:
      app: niikawa-testenv
  template:
    metadata:
      labels:
        app: niikawa-testenv
    spec:
      terminationGracePeriodSeconds: 0
      # securityContext:
      #   runAsUser: 1000
      #   runAsGroup: 3000
      #   fsGroup: 2000
      containers:
      - name: niikawa-testenv
        image: 111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest
        resources:
          requests:
            cpu: 1
        securityContext:
          allowPrivilegeEscalation: false
```

- Create the YAML file from the template above with cat. As in the session below, type the cat command, paste the template, and then type "EOF".

- As a quick check, use head to confirm that the file was written.

```shell
~ $ cat <<EOF > karpenter-deployment.yaml
> apiVersion: apps/v1
> kind: Deployment
> metadata:
>   name: niikawa-testenv
> spec:
>   replicas: 0
>   selector:
>     matchLabels:
>       app: niikawa-testenv
>   template:
>     metadata:
>       labels:
>         app: niikawa-testenv
>     spec:
>       terminationGracePeriodSeconds: 0
>       securityContext:
>         runAsUser: 1000
>         runAsGroup: 3000
>         fsGroup: 2000
>       containers:
>       - name: niikawa-testenv
>         image: 111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest
>         resources:
>           requests:
>             cpu: 1
>         securityContext:
>           allowPrivilegeEscalation: false
> EOF
~ $ head karpenter-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: niikawa-testenv
spec:
  replicas: 0
  selector:
    matchLabels:
      app: niikawa-testenv
  template:
~ $
```

- Now run kubectl apply and kubectl scale deployment.

```shell
~ $ kubectl apply -f karpenter-deployment.yaml
deployment.apps/niikawa-testenv created
~ $ kubectl scale deployment niikawa-testenv --replicas 5
deployment.apps/niikawa-testenv scaled
```
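After scaling, the Pods stay Pending until Karpenter launches capacity. In Karpenter v1, each node that Karpenter launches is tracked as a NodeClaim. Listing the NodeClaims next to the Pods shows whether provisioning has started at all. This is a minimal sketch: the function name is hypothetical, and the label selector matches the `app` label in the Deployment above.

```shell
# Hypothetical helper: show the app Pods (with their nodes) and Karpenter's NodeClaims.
show_scaling_state() {
  app="${1:-niikawa-testenv}"
  kubectl get pods -l "app=${app}" -o wide
  kubectl get nodeclaims
}
```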

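Troubleshooting ②

- This part is another troubleshooting walkthrough.

- As the output below shows, the deployed Pods fail to start.

- The console shows the Pod status as Pending.

- The STATUS column of kubectl get pods shows Pending.

- The kubectl get events output shows FailedScheduling warnings caused by insufficient CPU on the two existing nodes. The command below targets the karpenter namespace, so these particular events concern the Karpenter controller Pods, which were scheduled shortly afterwards. The events for the application Pods are in the default namespace.

- The kubectl logs output of the Karpenter controller shows "no subnets found", meaning Karpenter could not find a subnet in which to launch a new node. The NodePool is also reported as not ready ("ignoring nodepool, not ready"), so no node is provisioned for the Pending Pods.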

```shell
~ $ kubectl get pods
NAME READY STATUS RESTARTS AGE
niikawa-testenv-775767849d-5jkvn 0/1 Pending 0 7m48s
niikawa-testenv-775767849d-djjhn 0/1 Pending 0 7m48s
niikawa-testenv-775767849d-f9bkf 0/1 Pending 0 7m48s
niikawa-testenv-775767849d-gbs59 0/1 Pending 0 7m48s
niikawa-testenv-775767849d-ms9tn 0/1 Pending 0 7m48s
~ $ kubectl get events -n karpenter
LAST SEEN TYPE REASON OBJECT MESSAGE
24m Warning FailedScheduling pod/karpenter-6f98ccbdbc-2g9kh 0/2 nodes are available: 2 Insufficient cpu. preemption: 0/2 nodes are available: 2 No preemption victims found for incoming pod.
24m Normal Scheduled pod/karpenter-6f98ccbdbc-2g9kh Successfully assigned karpenter/karpenter-6f98ccbdbc-2g9kh to ip-10-192-21-220.us-west-2.compute.internal
24m Normal Pulling pod/karpenter-6f98ccbdbc-2g9kh Pulling image "public.ecr.aws/karpenter/controller:1.1.5@sha256:7a4fb72a1cf9ac26470aa5969620be3461b28f3fc990f057b5fc484db719455a"
24m Normal Pulled pod/karpenter-6f98ccbdbc-2g9kh Successfully pulled image "public.ecr.aws/karpenter/controller:1.1.5@sha256:7a4fb72a1cf9ac26470aa5969620be3461b28f3fc990f057b5fc484db719455a" in 2.464s (2.464s including waiting). Image size: 54677271 bytes.
24m Normal Created pod/karpenter-6f98ccbdbc-2g9kh Created container: controller
24m Normal Started pod/karpenter-6f98ccbdbc-2g9kh Started container controller
24m Warning FailedScheduling pod/karpenter-6f98ccbdbc-jm8tq 0/2 nodes are available: 2 Insufficient cpu. preemption: 0/2 nodes are available: 2 No preemption victims found for incoming pod.
24m Warning FailedScheduling pod/karpenter-6f98ccbdbc-jm8tq 0/2 nodes are available: 2 Insufficient cpu. preemption: 0/2 nodes are available: 2 No preemption victims found for incoming pod.
24m Normal Scheduled pod/karpenter-6f98ccbdbc-jm8tq Successfully assigned karpenter/karpenter-6f98ccbdbc-jm8tq to ip-10-192-20-142.us-west-2.compute.internal
24m Normal Pulling pod/karpenter-6f98ccbdbc-jm8tq Pulling image "public.ecr.aws/karpenter/controller:1.1.5@sha256:7a4fb72a1cf9ac26470aa5969620be3461b28f3fc990f057b5fc484db719455a"
24m Normal Pulled pod/karpenter-6f98ccbdbc-jm8tq Successfully pulled image "public.ecr.aws/karpenter/controller:1.1.5@sha256:7a4fb72a1cf9ac26470aa5969620be3461b28f3fc990f057b5fc484db719455a" in 1.983s (1.983s including waiting). Image size: 54677271 bytes.
24m Normal Created pod/karpenter-6f98ccbdbc-jm8tq Created container: controller
24m Normal Started pod/karpenter-6f98ccbdbc-jm8tq Started container controller
24m Normal SuccessfulCreate replicaset/karpenter-6f98ccbdbc Created pod: karpenter-6f98ccbdbc-2g9kh
24m Normal SuccessfulCreate replicaset/karpenter-6f98ccbdbc Created pod: karpenter-6f98ccbdbc-jm8tq
48m Normal Created pod/karpenter-9bd684877-2bjnw Created container: controller
48m Normal Started pod/karpenter-9bd684877-2bjnw Started container controller
27m Normal Pulled pod/karpenter-9bd684877-2bjnw Container image "public.ecr.aws/karpenter/controller:1.1.1@sha256:fe383abf1dbc79f164d1cbcfd8edaaf7ce97a43fbd6cb70176011ff99ce57523" already present on machine
29m Warning BackOff pod/karpenter-9bd684877-2bjnw Back-off restarting failed container controller in pod karpenter-9bd684877-2bjnw_karpenter(6ffc4a4b-58e9-4ba8-a206-e8eb448826c2)
48m Normal Created pod/karpenter-9bd684877-pkzqv Created container: controller
48m Normal Started pod/karpenter-9bd684877-pkzqv Started container controller
28m Normal Pulled pod/karpenter-9bd684877-pkzqv Container image "public.ecr.aws/karpenter/controller:1.1.1@sha256:fe383abf1dbc79f164d1cbcfd8edaaf7ce97a43fbd6cb70176011ff99ce57523" already present on machine
29m Warning BackOff pod/karpenter-9bd684877-pkzqv Back-off restarting failed container controller in pod karpenter-9bd684877-pkzqv_karpenter(cb9f3852-fdb8-428e-8206-cf3e1a71ae62)
24m Normal SuccessfulDelete replicaset/karpenter-9bd684877 Deleted pod: karpenter-9bd684877-2bjnw
24m Normal SuccessfulDelete replicaset/karpenter-9bd684877 Deleted pod: karpenter-9bd684877-pkzqv
24m Normal LeaderElection lease/karpenter-leader-election karpenter-6f98ccbdbc-2g9kh_ea914bb6-d8a8-4edc-b6c8-f9f7e404ba93 became leader
24m Normal ScalingReplicaSet deployment/karpenter Scaled up replica set karpenter-6f98ccbdbc from 0 to 1
24m Normal ScalingReplicaSet deployment/karpenter Scaled down replica set karpenter-9bd684877 from 2 to 1
24m Normal ScalingReplicaSet deployment/karpenter Scaled up replica set karpenter-6f98ccbdbc from 1 to 2
24m Normal ScalingReplicaSet deployment/karpenter Scaled down replica set karpenter-9bd684877 from 1 to 0
~ $
```

```shell
~ $ KARPENTER_POD_NAME=$(kubectl get pods -n karpenter -l app.kubernetes.io/name=karpenter -o jsonpath='{.items[0].metadata.name}')
~ $ kubectl logs -n karpenter ${KARPENTER_POD_NAME} | more
{"level":"ERROR","time":"2025-07-15T00:52:05.520Z","logger":"controller","message":"ignoring nodepool, not ready","commit":"4f41a6d","controller":"provisioner","namespace":"","name":"","reconcileID":"2c9295f0-df96-487e-bb88-cb041aae1fa7","NodePool":{"name":"default"}}
{"level":"INFO","time":"2025-07-15T00:52:05.520Z","logger":"controller","message":"no nodepools found","commit":"4f41a6d","controller":"provisioner","namespace":"","name":"","reconcileID":"2c9295f0-df96-487e-bb88-cb041aae1fa7"}
{"level":"ERROR","time":"2025-07-15T00:52:13.593Z","logger":"controller","message":"failed listing instance types for default","commit":"4f41a6d","controller":"disruption","namespace":"","name":"","reconcileID":"6e55a27a-6aae-44c7-8281-7c5aca688074","error":"no subnets found"}
{"level":"ERROR","time":"2025-07-15T00:52:13.593Z","logger":"controller","message":"failed listing instance types for default","commit":"4f41a6d","controller":"disruption","namespace":"","name":"","reconcileID":"6e55a27a-6aae-44c7-8281-7c5aca688074","error":"no subnets found"}
{"level":"ERROR","time":"2025-07-15T00:52:13.594Z","logger":"controller","message":"failed listing instance types for default","commit":"4f41a6d","controller":"disruption","namespace":"","name":"","reconcileID":"6e55a27a-6aae-44c7-8281-7c5aca688074","error":"no subnets found"}
{"level":"ERROR","time":"2025-07-15T00:52:13.594Z","logger":"controller","message":"failed listing instance types for default","commit":"4f41a6d","controller":"disruption","namespace":"","name":"","reconcileID":"6e55a27a-6aae-44c7-8281-7c5aca688074","error":"no subnets found"}
{"level":"ERROR","time":"2025-07-15T00:52:15.521Z","logger":"controller","message":"ignoring nodepool, not ready","commit":"4f41a6d","controller":"provisioner","namespace":"","name":"","reconcileID":"12c8d734-d8b7-40cd-9d71-19f1585fae8f","NodePool":{"name":"default"}}
{"level":"INFO","time":"2025-07-15T00:52:15.521Z","logger":"controller","message":"no nodepools found","commit":"4f41a6d","controller":"provisioner","namespace":"","name":"","reconcileID":"12c8d734-d8b7-40cd-9d71-19f1585fae8f"}
{"level":"ERROR","time":"2025-07-15T00:52:23.595Z","logger":"controller","message":"failed listing instance types for default","commit":"4f41a6d","controller":"disruption","namespace":"","name":"","reconcileID":"a82eefd6-d1b8-4f92-bd36-26defa7af21f","error":"no subnets found"}
{"level":"ERROR","time":"2025-07-15T00:52:23.595Z","logger":"controller","message":"failed listing instance types for default","commit":"4f41a6d","controller":"disruption","namespace":"","name":"","reconcileID":"a82eefd6-d1b8-4f92-bd36-26defa7af21f","error":"no subnets found"}
{"level":"ERROR","time":"2025-07-15T00:52:23.596Z","logger":"controller","message":"failed listing instance types for default","commit":"4f41a6d","controller":"disruption","namespace":"","name":"","reconcileID":"a82eefd6-d1b8-4f92-bd36-26defa7af21f","error":"no subnets found"}
{"level":"ERROR","time":"2025-07-15T00:52:23.596Z","logger":"controller","message":"failed listing instance types for default","commit":"4f41a6d","controller":"disruption","namespace":"","name":"","reconcileID":"a82eefd6-d1b8-4f92-bd36-26defa7af21f","error":"no subnets found"}
```
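Karpenter writes JSON logs, so paging through them with `more` quickly becomes tedious. The hypothetical helper below uses only grep, sed, sort and uniq. It collapses the ERROR lines into counts of distinct message/error pairs, which makes a repeated cause such as "no subnets found" stand out. It assumes that messages contain no escaped double quotes.

```shell
# Hypothetical helper: summarise Karpenter JSON log lines (stdin) as "count message: error".
summarize_karpenter_errors() {
  grep '"level":"ERROR"' |
    sed -n \
      -e 's/.*"message":"\([^"]*\)".*"error":"\([^"]*\)".*/\1: \2/p' \
      -e 's/.*"message":"\([^"]*\)".*/\1/p' |
    sort | uniq -c | sort -rn
}
```

Example: `kubectl logs -n karpenter "${KARPENTER_POD_NAME}" | summarize_karpenter_errors`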

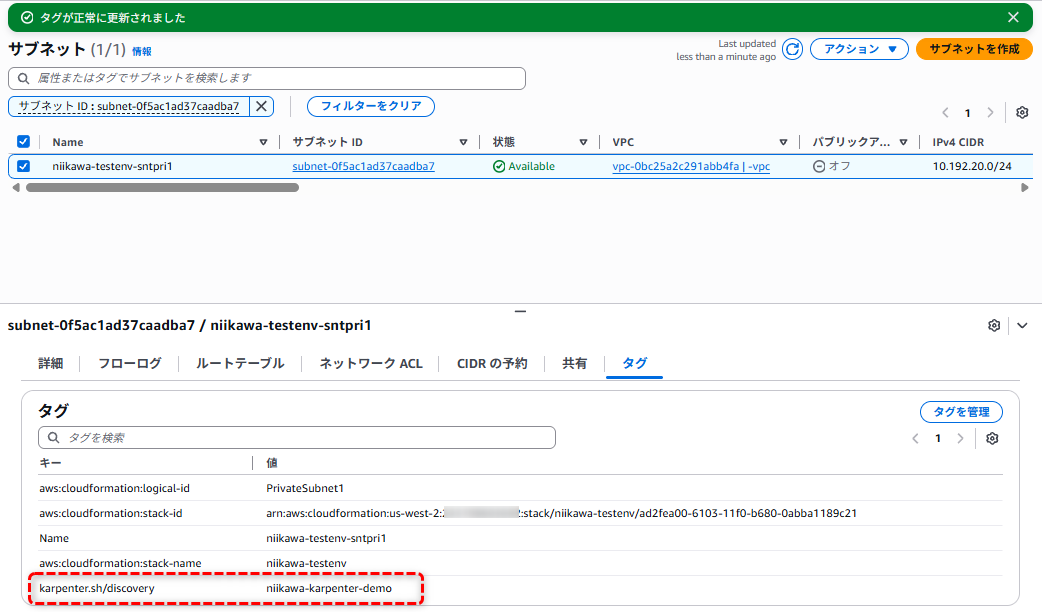

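- In this hands-on, resources are placed in an existing VPC and existing subnets. When you use existing subnets, you must tag them so that Karpenter can discover them through the subnetSelectorTerms of the EC2NodeClass.

- Add a tag to the subnets with the key karpenter.sh/discovery and the cluster name (the value of CLUSTER_NAME) as its value.

- Delete the Deployment, then run kubectl apply again.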

```shell
~ $ kubectl delete deployment niikawa-testenv
deployment.apps "niikawa-testenv" deleted
~ $ kubectl apply -f karpenter-deployment.yaml
deployment.apps/niikawa-testenv created
~ $ kubectl scale deployment niikawa-testenv --replicas 5
deployment.apps/niikawa-testenv scaled
```
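The subnet tag added in the console can also be applied with the AWS CLI. In the sketch below, the subnet IDs are placeholders for the subnets in your VPC, and the function names are hypothetical. The same `create-tags` call also accepts security group IDs, which the EC2NodeClass above selects with the same tag.

```shell
# Hypothetical helpers: tag resources for Karpenter discovery and list the subnets the tag matches.
# Assumes CLUSTER_NAME is exported and the AWS CLI is configured for the right region.
tag_for_karpenter_discovery() {
  if [ -z "${CLUSTER_NAME:-}" ] || [ "$#" -eq 0 ]; then
    echo "usage: tag_for_karpenter_discovery <resource-id>..." >&2
    return 1
  fi
  aws ec2 create-tags --resources "$@" \
    --tags "Key=karpenter.sh/discovery,Value=${CLUSTER_NAME}"
}

list_karpenter_subnets() {
  aws ec2 describe-subnets \
    --filters "Name=tag:karpenter.sh/discovery,Values=${CLUSTER_NAME}" \
    --query 'Subnets[].SubnetId' --output text
}
```

Example: `tag_for_karpenter_discovery subnet-0123example subnet-0456example`, then `list_karpenter_subnets` to confirm that both IDs are returned.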

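Troubleshooting ③

- Further troubleshooting follows.

- As the output below shows, the deployed Pods fail to start again.

- The STATUS column of kubectl get pods shows CrashLoopBackOff.

- The Events section of kubectl describe pod shows FailedScheduling warnings (insufficient CPU) at first. Karpenter then nominated a new node (nodeclaim/default-pj7vh), and the Pod was scheduled onto it. The subnet problem is solved, and this time the container itself keeps exiting.

- The kubectl logs output shows an nginx error: mkdir fails with "Permission denied".

- For better security, the securityContext entry was added so that the application in the Docker image would run as a non-root user. However, this nginx image needs its master process to start as root, because it creates directories such as /var/cache/nginx/client_temp at startup.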

```shell
~ $ kubectl get pods
NAME READY STATUS RESTARTS AGE
niikawa-testenv-775767849d-bzd8s 0/1 CrashLoopBackOff 5 (104s ago) 5m26s
niikawa-testenv-775767849d-d2m9j 0/1 CrashLoopBackOff 5 (2m4s ago) 5m26s
niikawa-testenv-775767849d-dbpkw 0/1 CrashLoopBackOff 5 (116s ago) 5m26s
niikawa-testenv-775767849d-kb9z2 0/1 CrashLoopBackOff 5 (119s ago) 5m26s
niikawa-testenv-775767849d-xw2rf 0/1 CrashLoopBackOff 5 (119s ago) 5m26s
~ $ kubectl describe pod niikawa-testenv-775767849d-bzd8s
Name: niikawa-testenv-775767849d-bzd8s
Namespace: default
Priority: 0
Service Account: default
Node: ip-10-192-11-33.us-west-2.compute.internal/10.192.11.33
Start Time: Tue, 15 Jul 2025 01:22:03 +0000
Labels: app=niikawa-testenv
pod-template-hash=775767849d
Annotations: <none>
Status: Running
IP: 10.192.11.9
IPs:
IP: 10.192.11.9
Controlled By: ReplicaSet/niikawa-testenv-775767849d
Containers:
niikawa-testenv:
Container ID: containerd://86dacd21a084a3a2e86586223bf34578b1582436ac1cce762685ae6367751210
Image: 111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest
Image ID: 111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv@sha256:9390ddcc28aec8ee8857b41ef38b22dd387b02e1075d89c2b6dc6d4b2e673e07
Port: <none>
Host Port: <none>
State: Waiting
Reason: CrashLoopBackOff
Last State: Terminated
Reason: Error
Exit Code: 1
Started: Tue, 15 Jul 2025 01:25:14 +0000
Finished: Tue, 15 Jul 2025 01:25:14 +0000
Ready: False
Restart Count: 5
Requests:
cpu: 1
Environment: <none>
Mounts:
/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-h7nht (ro)
Conditions:
Type Status
PodReadyToStartContainers True
Initialized True
Ready False
ContainersReady False
PodScheduled True
Volumes:
kube-api-access-h7nht:
Type: Projected (a volume that contains injected data from multiple sources)
TokenExpirationSeconds: 3607
ConfigMapName: kube-root-ca.crt
ConfigMapOptional: <nil>
DownwardAPI: true
QoS Class: Burstable
Node-Selectors: <none>
Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
Warning FailedScheduling 5m32s default-scheduler 0/2 nodes are available: 2 Insufficient cpu. preemption: 0/2 nodes are available: 2 No preemption victims found for incoming pod.
Warning FailedScheduling 5m11s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {karpenter.sh/unregistered: }, 2 Insufficient cpu. preemption: 0/3 nodes are available: 1 Preemption is not helpful for scheduling, 2 No preemption victims found for incoming pod.
Warning FailedScheduling 5m10s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {node.cloudprovider.kubernetes.io/uninitialized: true}, 2 Insufficient cpu. preemption: 0/3 nodes are available: 1 Preemption is not helpful for scheduling, 2 No preemption victims found for incoming pod.
Warning FailedScheduling 5m10s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {node.kubernetes.io/not-ready: }, 2 Insufficient cpu. preemption: 0/3 nodes are available: 1 Preemption is not helpful for scheduling, 2 No preemption victims found for incoming pod.
Warning FailedScheduling 5m10s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {node.kubernetes.io/not-ready: }, 2 Insufficient cpu. preemption: 0/3 nodes are available: 1 Preemption is not helpful for scheduling, 2 No preemption victims found for incoming pod.
Normal Scheduled 5m2s default-scheduler Successfully assigned default/niikawa-testenv-775767849d-bzd8s to ip-10-192-11-33.us-west-2.compute.internal
Normal Nominated 5m31s karpenter Pod should schedule on: nodeclaim/default-pj7vh
Normal Pulled 4m59s kubelet Successfully pulled image "111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest" in 1.945s (1.945s including waiting). Image size: 73132967 bytes.
Normal Pulled 4m55s kubelet Successfully pulled image "111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest" in 256ms (256ms including waiting). Image size: 73132967 bytes.
Normal Pulled 4m44s kubelet Successfully pulled image "111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest" in 144ms (144ms including waiting). Image size: 73132967 bytes.
Normal Pulled 4m14s kubelet Successfully pulled image "111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest" in 157ms (157ms including waiting). Image size: 73132967 bytes.
Normal Pulled 3m24s kubelet Successfully pulled image "111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest" in 112ms (112ms including waiting). Image size: 73132967 bytes.
Normal Pulling 112s (x6 over 5m1s) kubelet Pulling image "111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest"
Normal Pulled 112s kubelet Successfully pulled image "111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest" in 188ms (188ms including waiting). Image size: 73132967 bytes.
Normal Created 111s (x6 over 4m59s) kubelet Created container: niikawa-testenv
Normal Started 111s (x6 over 4m59s) kubelet Started container niikawa-testenv
Warning BackOff 4s (x23 over 4m55s) kubelet Back-off restarting failed container niikawa-testenv in pod niikawa-testenv-775767849d-bzd8s_default(a1ff9e04-dd4a-474e-b0ad-94a6b02c677f)
~ $ kubectl logs niikawa-testenv-775767849d-bzd8s
/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration
/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/
/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh
10-listen-on-ipv6-by-default.sh: info: can not modify /etc/nginx/conf.d/default.conf (read-only file system?)
/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh
/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh
/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh
/docker-entrypoint.sh: Configuration complete; ready for start up
2025/07/15 01:27:57 [warn] 1#1: the "user" directive makes sense only if the master process runs with super-user privileges, ignored in /etc/nginx/nginx.conf:5
nginx: [warn] the "user" directive makes sense only if the master process runs with super-user privileges, ignored in /etc/nginx/nginx.conf:5
2025/07/15 01:27:57 [emerg] 1#1: mkdir() "/var/cache/nginx/client_temp" failed (13: Permission denied)
nginx: [emerg] mkdir() "/var/cache/nginx/client_temp" failed (13: Permission denied)
~ $
```
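You can check whether an image expects to start as root before adding runAsUser. `docker image inspect` exposes the image's configured USER. An empty value means the image runs as root by default. Judging by the /docker-entrypoint.sh messages above, this application appears to be built on the official nginx image. The helper name and the IMAGE_URI variable below are hypothetical.

```shell
# Hypothetical helper: given an image's configured USER, decide whether it starts as root.
# An empty USER means the image runs as root unless the Pod overrides it.
runs_as_root() {
  case "$1" in
    "" | root | 0 | root:* | 0:*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example (needs Docker and the image available locally; IMAGE_URI is a placeholder):
#   user=$(docker image inspect --format '{{.Config.User}}' "${IMAGE_URI}")
#   runs_as_root "${user}" && echo "starts as root: runAsUser may break it"
```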

- Comment out the securityContext section in karpenter-deployment.yaml again.

- Run kubectl apply again.

```shell
~ $ kubectl apply -f karpenter-deployment.yaml
deployment.apps/niikawa-testenv configured
~ $ kubectl scale deployment niikawa-testenv --replicas 5
deployment.apps/niikawa-testenv scaled
```

- The Pods started successfully.

```shell
~ $ kubectl get pods
NAME READY STATUS RESTARTS AGE
niikawa-testenv-c7b765bb7-l854z 1/1 Running 0 62s
niikawa-testenv-c7b765bb7-lvxb9 1/1 Running 0 62s
niikawa-testenv-c7b765bb7-ncs59 1/1 Running 0 62s
niikawa-testenv-c7b765bb7-p6m4b 1/1 Running 0 62s
niikawa-testenv-c7b765bb7-s78v5 1/1 Running 0 62s
~ $
~ $ kubectl describe pod niikawa-testenv-c7b765bb7-l854z
Name: niikawa-testenv-c7b765bb7-l854z
Namespace: default
Priority: 0
Service Account: default
Node: ip-10-192-10-110.us-west-2.compute.internal/10.192.10.110
Start Time: Tue, 15 Jul 2025 01:34:21 +0000
Labels: app=niikawa-testenv
pod-template-hash=c7b765bb7
Annotations: <none>
Status: Running
IP: 10.192.10.184
IPs:
IP: 10.192.10.184
Controlled By: ReplicaSet/niikawa-testenv-c7b765bb7
Containers:
niikawa-testenv:
Container ID: containerd://8ec14bef8c7d1232f6d714a6a55f2a88183e906229500429055878bbc62faf0d
Image: 111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest
Image ID: 111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv@sha256:9390ddcc28aec8ee8857b41ef38b22dd387b02e1075d89c2b6dc6d4b2e673e07
Port: <none>
Host Port: <none>
State: Running
Started: Tue, 15 Jul 2025 01:34:24 +0000
Ready: True
Restart Count: 0
Requests:
cpu: 1
Environment: <none>
Mounts:
/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-qccww (ro)
Conditions:
Type Status
PodReadyToStartContainers True
Initialized True
Ready True
ContainersReady True
PodScheduled True
Volumes:
kube-api-access-qccww:
Type: Projected (a volume that contains injected data from multiple sources)
TokenExpirationSeconds: 3607
ConfigMapName: kube-root-ca.crt
ConfigMapOptional: <nil>
DownwardAPI: true
QoS Class: Burstable
Node-Selectors: <none>
Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
Type Reason Age From Message
---- ------ ---- ---- -------
Warning FailedScheduling 87s default-scheduler 0/3 nodes are available: 1 node(s) had untolerated taint {karpenter.sh/disrupted: }, 2 Insufficient cpu. preemption: 0/3 nodes are available: 1 Preemption is not helpful for scheduling, 2 No preemption victims found for incoming pod.
Warning FailedScheduling 66s default-scheduler 0/4 nodes are available: 1 node(s) had untolerated taint {karpenter.sh/disrupted: }, 1 node(s) had untolerated taint {node.cloudprovider.kubernetes.io/uninitialized: true}, 2 Insufficient cpu. preemption: 0/4 nodes are available: 2 No preemption victims found for incoming pod, 2 Preemption is not helpful for scheduling.
Warning FailedScheduling 66s default-scheduler 0/4 nodes are available: 1 node(s) had untolerated taint {karpenter.sh/disrupted: }, 1 node(s) had untolerated taint {node.kubernetes.io/not-ready: }, 2 Insufficient cpu. preemption: 0/4 nodes are available: 2 No preemption victims found for incoming pod, 2 Preemption is not helpful for scheduling.
Warning FailedScheduling 64s default-scheduler 0/4 nodes are available: 1 node(s) had untolerated taint {karpenter.sh/disrupted: }, 1 node(s) had untolerated taint {node.kubernetes.io/not-ready: }, 2 Insufficient cpu. preemption: 0/4 nodes are available: 2 No preemption victims found for incoming pod, 2 Preemption is not helpful for scheduling.
Normal Scheduled 57s default-scheduler Successfully assigned default/niikawa-testenv-c7b765bb7-l854z to ip-10-192-10-110.us-west-2.compute.internal
Normal Nominated 86s karpenter Pod should schedule on: nodeclaim/default-d5hkp
Normal Pulling 57s kubelet Pulling image "111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest"
Normal Pulled 55s kubelet Successfully pulled image "111111111111.dkr.ecr.us-west-2.amazonaws.com/niikawa-testenv:latest" in 2.055s (2.055s including waiting). Image size: 73132967 bytes.
Normal Created 55s kubelet Created container: niikawa-testenv
Normal Started 55s kubelet Started container niikawa-testenv
~ $
```

Hands-on 6: Verifying from CloudShell

Setting up a CloudShell VPC environment

- Up to this point, CloudShell has been used in its standard mode, which has internet access. To reach resources inside the VPC, set up a VPC environment, which places CloudShell inside the VPC.

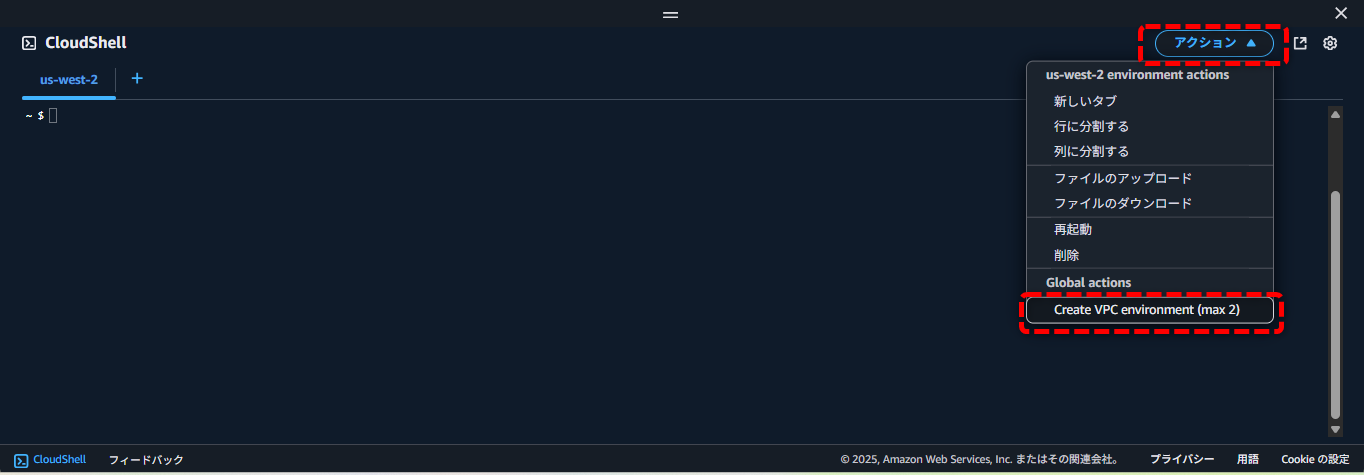

- Select "Actions" → "Create VPC environment".

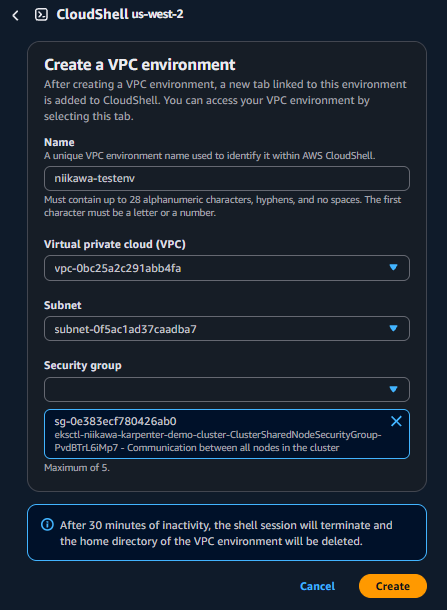

- Specify the name of the VPC environment, the VPC and subnet to place it in, and the security group to assign to it, then select "Create".

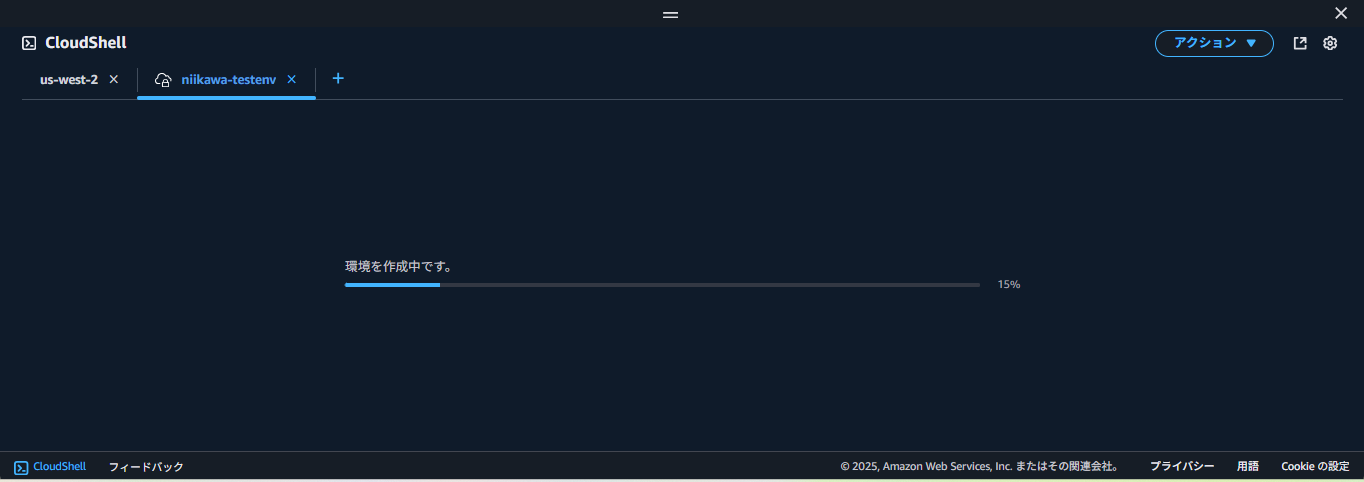

- The VPC environment is being created.

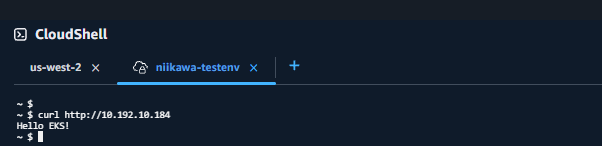

- As the red frame shows, the CloudShell VPC environment is placed inside the VPC.

Connectivity check from CloudShell

- Next, use the VPC environment to check connectivity. Run curl against the Pod's IP address.

- The response "Hello EKS!" is returned as expected.
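The curl check can be repeated for every replica by looking up the Pod IPs with a label selector. The sketch assumes that the container listens on port 80 (nginx's default) and that the security group allows traffic from the CloudShell VPC environment. The function name is hypothetical.

```shell
# Hypothetical helper: curl every Pod with the given app label by its Pod IP.
curl_each_pod() {
  app="${1:-niikawa-testenv}"
  for ip in $(kubectl get pods -l "app=${app}" -o jsonpath='{.items[*].status.podIP}'); do
    printf '%s: ' "${ip}"
    # --max-time keeps a blocked security group from hanging the loop
    curl -s --max-time 5 "http://${ip}/"
  done
}
```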

Hands-on 7: Making the control plane private

Creating VPC endpoints

- Create the following VPC endpoints. The creation steps are omitted.

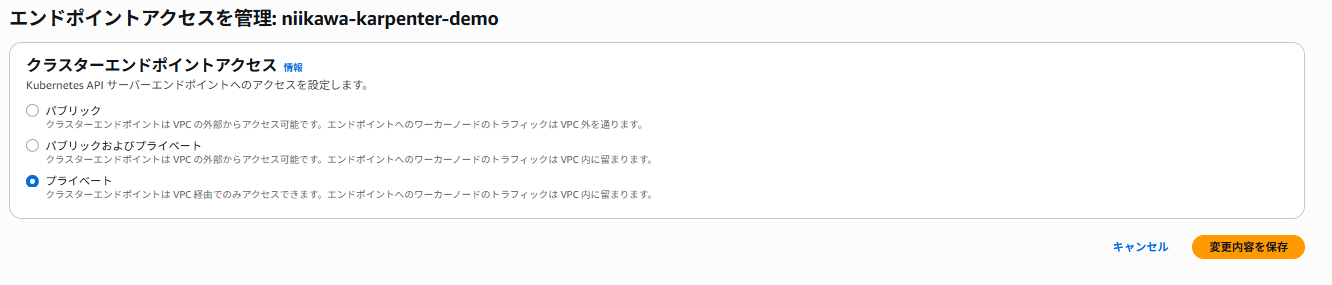

Changing the cluster endpoint access to private

- In the EKS console, confirm that "API server endpoint" is set to "Public".

- Under "Networking" → "Manage", open "Manage endpoint access".

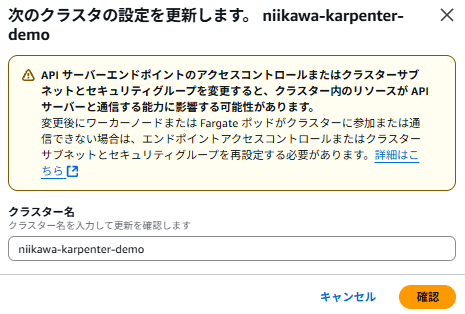

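- Select "Private" and click "Save changes".

- The confirmation screen is shown.

- After the change, confirm that "API server endpoint" has changed to "Private".

- As a precaution, also update the security group so that traffic from CloudShell is allowed.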
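The console change above can also be made with the AWS CLI. The sketch below switches the endpoint to private-only and reads the setting back. The update runs asynchronously and takes several minutes, so the new values appear only after it completes. The function names are hypothetical.

```shell
# Hypothetical helpers: switch the API server endpoint to private-only and read it back.
# Assumes CLUSTER_NAME is exported and the AWS CLI is configured for the cluster's region.
make_endpoint_private() {
  aws eks update-cluster-config --name "${CLUSTER_NAME}" \
    --resources-vpc-config endpointPublicAccess=false,endpointPrivateAccess=true
}

show_endpoint_access() {
  aws eks describe-cluster --name "${CLUSTER_NAME}" \
    --query 'cluster.resourcesVpcConfig.[endpointPublicAccess,endpointPrivateAccess]' \
    --output text
}
```

Once the update has finished, `show_endpoint_access` should report public access as disabled and private access as enabled.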

Check connectivity from CloudShell

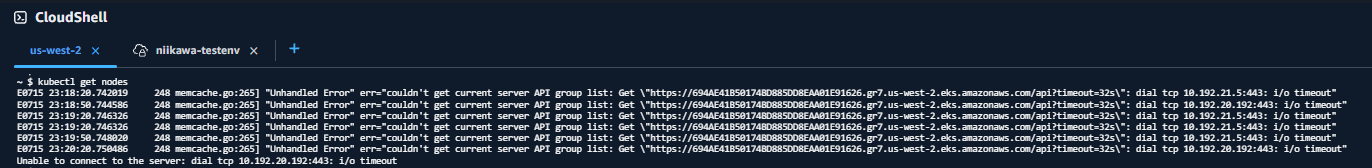

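- From the standard CloudShell, kubectl fails with "i/o timeout" once the endpoint is private: the endpoint hostname now resolves to private VPC addresses (10.192.x.x in the log below), which the standard CloudShell, running outside the VPC, cannot reach.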

~ $ kubectl get nodes
E0715 23:18:20.742019 248 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://694AE41B50174BD885DD8EAA01E91626.gr7.us-west-2.eks.amazonaws.com/api?timeout=32s\": dial tcp 10.192.21.5:443: i/o timeout"
E0715 23:18:50.744586 248 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://694AE41B50174BD885DD8EAA01E91626.gr7.us-west-2.eks.amazonaws.com/api?timeout=32s\": dial tcp 10.192.20.192:443: i/o timeout"
E0715 23:19:20.746326 248 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://694AE41B50174BD885DD8EAA01E91626.gr7.us-west-2.eks.amazonaws.com/api?timeout=32s\": dial tcp 10.192.21.5:443: i/o timeout"
E0715 23:19:50.748020 248 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://694AE41B50174BD885DD8EAA01E91626.gr7.us-west-2.eks.amazonaws.com/api?timeout=32s\": dial tcp 10.192.21.5:443: i/o timeout"
E0715 23:20:20.750486 248 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"https://694AE41B50174BD885DD8EAA01E91626.gr7.us-west-2.eks.amazonaws.com/api?timeout=32s\": dial tcp 10.192.20.192:443: i/o timeout"
Unable to connect to the server: dial tcp 10.192.20.192:443: i/o timeout
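The log already points at the cause: kubectl dials 10.192.x.x, private addresses inside the VPC, and the packets never get an answer. A hedged way to confirm this is sketched below; `api_endpoint_ips` and `tcp_probe` are hypothetical helpers, and the probe relies on bash's `/dev/tcp` redirection and the coreutils `timeout` command. A "timeout" result matches the i/o timeout above, while a quick "failed" matches errors such as "connection refused".

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helpers for diagnosing the private endpoint.

# Resolve the cluster's API endpoint hostname to its IPv4 addresses.
api_endpoint_ips() {
  local cluster="${1:-niikawa-karpenter-demo}" region="${2:-us-west-2}" url host
  url=$(aws eks describe-cluster --region "$region" --name "$cluster" \
    --query 'cluster.endpoint' --output text) || return 1
  host=${url#https://}   # getent expects a bare hostname, not a URL
  getent ahostsv4 "$host" | awk '{print $1}' | sort -u
}

# Try a TCP connection and classify the result:
#   open    - the handshake completed
#   timeout - no answer within 5 s (packets dropped: outside the VPC, SG, routing)
#   failed  - rejected quickly, e.g. "connection refused" (nothing listening)
tcp_probe() {
  local ip="$1" port="${2:-443}" rc=0
  timeout 5 bash -c "exec 3<>/dev/tcp/${ip}/${port}" 2>/dev/null || rc=$?
  case "$rc" in
    0) echo "open" ;;
    124) echo "timeout" ;;
    *) echo "failed" ;;
  esac
}

# Usage:
#   for ip in $(api_endpoint_ips); do echo "$ip $(tcp_probe "$ip")"; done
```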

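- From the CloudShell VPC environment, the cluster is reachable. On first use, however, kubectl reports "connection refused" against localhost:8080, because the new environment has no kubeconfig yet and kubectl falls back to that default address; run the aws eks update-kubeconfig command to fix this.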

~ $ kubectl get nodes
E0715 23:17:28.120468 239 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"http://localhost:8080/api?timeout=32s\": dial tcp 127.0.0.1:8080: connect: connection refused"
E0715 23:17:28.122253 239 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"http://localhost:8080/api?timeout=32s\": dial tcp 127.0.0.1:8080: connect: connection refused"
E0715 23:17:28.123939 239 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"http://localhost:8080/api?timeout=32s\": dial tcp 127.0.0.1:8080: connect: connection refused"
E0715 23:17:28.125620 239 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"http://localhost:8080/api?timeout=32s\": dial tcp 127.0.0.1:8080: connect: connection refused"
E0715 23:17:28.127088 239 memcache.go:265] "Unhandled Error" err="couldn't get current server API group list: Get \"http://localhost:8080/api?timeout=32s\": dial tcp 127.0.0.1:8080: connect: connection refused"
The connection to the server localhost:8080 was refused - did you specify the right host or port?
~ $
~ $ eksctl get cluster
NAME REGION EKSCTL CREATED
niikawa-karpenter-demo us-west-2 True
~ $
~ $ aws eks update-kubeconfig --region us-west-2 --name niikawa-karpenter-demo
Added new context arn:aws:eks:us-west-2:111111111111:cluster/niikawa-karpenter-demo to /home/cloudshell-user/.kube/config
~ $
~ $ kubectl get nodes
NAME STATUS ROLES AGE VERSION
ip-10-192-10-110.us-west-2.compute.internal Ready 21h v1.33.0-eks-802817d
ip-10-192-20-142.us-west-2.compute.internal Ready 23h v1.33.0-eks-802817d
ip-10-192-21-220.us-west-2.compute.internal Ready 23h v1.33.0-eks-802817d
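As the session above shows, the VPC environment starts without the kubeconfig that exists in the standard environment, so kubectl falls back to localhost:8080 until `aws eks update-kubeconfig` is run. A minimal sketch that runs it only when no current context is set; `ensure_kubeconfig` is a hypothetical helper, and its defaults are this hands-on's cluster name and region.

```shell
#!/usr/bin/env bash
# Sketch: create the kubeconfig entry only if kubectl has no current context.
ensure_kubeconfig() {
  local cluster="${1:-niikawa-karpenter-demo}" region="${2:-us-west-2}" ctx
  if ctx=$(kubectl config current-context 2>/dev/null); then
    echo "kubeconfig already set: $ctx"
  else
    aws eks update-kubeconfig --region "$region" --name "$cluster"
  fi
}

# Usage in a fresh CloudShell VPC environment:
#   ensure_kubeconfig && kubectl get nodes
```

This only checks that some context exists; it does not verify that the context points at this cluster.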

Closing

- Thank you for following along. This concludes the Amazon EKS + Karpenter hands-on. Both EKS and Karpenter offer many features and configurable settings, so keep exploring EKS from here.

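- The EKS cluster, its related resources, the NAT Gateway, and the other resources created in this hands-on incur charges as long as they are running. Don't forget to delete them when you are done; deleting the CloudFormation stacks is the simplest way to do this.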

- I hope this article helps you in your AWS learning.
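As a rough guide to the cleanup mentioned above, the sketch below shows one possible teardown order. It assumes the cluster was created with eksctl (as `eksctl get cluster` reports) and that the VPC from Hands-on 1 lives in a CloudFormation stack whose name you pass in `VPC_STACK`; the default value and the helper names are hypothetical. Because the endpoint is now private, run `teardown_cluster` from the CloudShell VPC environment. Resources created outside these stacks, such as the VPC endpoints from Hands-on 7 and the CloudShell VPC environment itself, also use the VPC and should be removed before `teardown_vpc`, otherwise the stack deletion can fail.

```shell
#!/usr/bin/env bash
# Sketch only. Assumptions: the cluster was created by eksctl, and VPC_STACK is
# the name of the Hands-on 1 CloudFormation stack (placeholder default below).
CLUSTER=niikawa-karpenter-demo
REGION=us-west-2
VPC_STACK="${VPC_STACK:-my-vpc-stack}"   # hypothetical: set your real stack name

# Step 1 (from the CloudShell VPC environment, since kubectl needs the private endpoint).
teardown_cluster() {
  # Let Karpenter terminate the EC2 nodes it launched; eksctl does not manage them.
  kubectl delete nodepools --all || return 1
  # Delete the cluster and the CloudFormation stacks eksctl created for it.
  eksctl delete cluster --name "$CLUSTER" --region "$REGION" || return 1
}

# Step 2 (after removing manually created VPC endpoints and the CloudShell VPC environment).
teardown_vpc() {
  aws cloudformation delete-stack --region "$REGION" --stack-name "$VPC_STACK" || return 1
  aws cloudformation wait stack-delete-complete --region "$REGION" --stack-name "$VPC_STACK"
}
```

Deleting the NodePools first matters because instances launched by Karpenter are not part of any eksctl stack and would otherwise keep running and keep the subnets in use.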