Importing EC2, S3, and Lambda with Terraform import blocks


Overview

- This month I had a task that involved Terraform import blocks, so in this article I write up what I tried with them beforehand.

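- Until now, bringing existing resources under Terraform management required the terraform import command. That command can import only one resource at a time, and the tf code for each matching resource has to be written by hand. The Terraform import block used in this article is a feature introduced in Terraform 1.5.0.

- In this article, I verified importing an EC2 resource, and importing S3, Lambda, and S3 Notification (event notification) resources.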
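For comparison, below is a minimal import block with a literal ID. This is the form available since Terraform 1.5.0. Using a variable for id, as this article does later, needs 1.6.0. The ID and resource address come from the EC2 example in this article. The comment shows the terraform import command that the block stands in for.

```hcl
# Declarative import (Terraform >= 1.5.0). Terraform performs the import
# during plan/apply instead of as a separate CLI step. Roughly equivalent to:
#   terraform import aws_instance.imported_resources_1 i-0ba7a1f0f1b4358f1
import {
  id = "i-0ba7a1f0f1b4358f1"             # ID of the existing AWS resource
  to = aws_instance.imported_resources_1 # address of the resource in the config
}
```

Because the import shows up in terraform plan, it can be reviewed before apply. Several import blocks can also be processed in a single run.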

Preparation

- My test environment (Ubuntu on WSL2) uses Terraform v1.7.5, installed with tfenv. For how to set up tfenv on Ubuntu, see this article.

$ tfenv list

* 1.7.5 (set by /home/linuxbrew/.linuxbrew/Cellar/tfenv/3.0.0/version)

$ terraform -version

Terraform v1.7.5

on linux_amd64
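This article needs Terraform 1.6.0 or later, because it uses a variable in the import block id. It also adds the archive provider later on. For both reasons, it may help to pin these versions in a terraform block. The sketch below is a hedged suggestion, not the author's configuration:

- The file name versions.tf is hypothetical.
- The version constraints are derived from the versions in the command output (hashicorp/aws v5.44.0, hashicorp/archive v2.4.2).

```hcl
# versions.tf (hypothetical file name)
terraform {
  # A non-literal id (e.g. var.*) in an import block needs Terraform >= 1.6.0
  required_version = ">= 1.6.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.44" # v5.44.0 is used in this article
    }
    archive = {
      source  = "hashicorp/archive"
      version = "~> 2.4" # v2.4.2 is installed later to zip the Lambda code
    }
  }
}
```

Declaring a provider here does not by itself update .terraform.lock.hcl. terraform init still has to run after a new provider is added.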

Importing an EC2 resource with a Terraform import block

- Prepare an existing resource that is not managed by Terraform. I created an EC2 instance from the AWS console.

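- Prepare a tf file containing the import block (e.g. import.tf). An import block takes id and to. id is the ID of the AWS resource, and to is the resource address in the form [resource block type].[resource block name]. Here, as shown below, I used a variable for id. Support for values other than string literals in an import block's id was added in Terraform v1.6.0.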

# ----------------------------------

# import block

# ----------------------------------

import {

id = var.ec2_instance.ec2_instance_1

to = aws_instance.imported_resources_1

}

- Define the variable in variables.tf. This is where the AWS resource ID is specified.

variable "ec2_instance" {

type = map(string)

default = {

ec2_instance_1 = "i-0ba7a1f0f1b4358f1"

}

}

- Run terraform init.

$ terraform init

Initializing the backend...

Initializing provider plugins...

- Reusing previous version of hashicorp/aws from the dependency lock file

- Using previously-installed hashicorp/aws v5.44.0

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

- Run terraform plan. The following error is displayed.

- Error: Configuration for import target does not exist

- According to the message, generating the resource block automatically requires passing the -generate-config-out option to plan.

$ terraform plan

╷

│ Error: Configuration for import target does not exist

│

│ on import.tf line 6, in import:

│ 6: to = aws_instance.imported_resources_1

│

│ The configuration for the given import target aws_instance.imported_resources_1 does not exist. If you wish to automatically generate config for this resource, use the -generate-config-out option within terraform

│ plan. Otherwise, make sure the target resource exists within your configuration. For example:

│

│ terraform plan -generate-config-out=generated.tf

╵
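The error message also names the other option: make sure the target resource exists in the configuration, that is, write the resource block by hand. Below is a minimal sketch that assumes the values shown in the EC2 plan output later in this article. The masked x placeholders must be replaced with real IDs. Arguments that are left out but computed by the provider should keep their current values. Anything that differs from the real instance would appear as an in-place change, so the plan still needs checking.

```hcl
# Hand-written import target (alternative to -generate-config-out).
# Values taken from this article's plan output; x placeholders are masked.
resource "aws_instance" "imported_resources_1" {
  ami                    = "ami-xxxxxxxxxxxxxxxxx"
  instance_type          = "t2.micro"
  subnet_id              = "subnet-xxxxxxxx"
  vpc_security_group_ids = ["sg-xxxxxxxxxxxxxxxxx"]
  key_name               = "test-key"
  iam_instance_profile   = "test-role"

  tags = {
    Name = "niikawa-ec2"
  }
}
```

With this block next to the import block, terraform plan (without -generate-config-out) should report the import. Ideally the plan shows 0 to change before you run apply.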

- Next, run terraform plan with the -generate-config-out option.

$ terraform plan -generate-config-out=main.tf

aws_instance.imported_resources_1: Preparing import... [id=i-0ba7a1f0f1b4358f1]

aws_instance.imported_resources_1: Refreshing state... [id=i-0ba7a1f0f1b4358f1]

Planning failed. Terraform encountered an error while generating this plan.

╷

│ Warning: Config generation is experimental

│

│ Generating configuration during import is currently experimental, and the generated configuration format may change in future versions.

╵

╷

│ Error: Conflicting configuration arguments

│

│ with aws_instance.imported_resources_1,

│ on main.tf line 14:

│ (source code not available)

│

│ "ipv6_address_count": conflicts with ipv6_addresses

╵

╷

│ Error: Conflicting configuration arguments

│

│ with aws_instance.imported_resources_1,

│ on main.tf line 15:

│ (source code not available)

│

│ "ipv6_addresses": conflicts with ipv6_address_count

╵

- As a result, main.tf was generated automatically, but the errors below were displayed. It appears that ipv6_addresses and ipv6_address_count conflict with each other.

- Error: Conflicting configuration arguments

- Fix main.tf by hand. I commented out ipv6_addresses.

# __generated__ by Terraform

resource "aws_instance" "imported_resources_1" {

** omitted **

ipv6_address_count = 0

# ipv6_addresses = []

** omitted **

}

- Run terraform plan again. This time no error is displayed. As "1 to import, 0 to add, 0 to change, 0 to destroy." indicates, one resource will be imported.

$ terraform plan

aws_instance.imported_resources_1: Preparing import... [id=i-0ba7a1f0f1b4358f1]

aws_instance.imported_resources_1: Refreshing state... [id=i-0ba7a1f0f1b4358f1]

Terraform will perform the following actions:

# aws_instance.imported_resources_1 will be imported

resource "aws_instance" "imported_resources_1" {

ami = "ami-xxxxxxxxxxxxxxxxx"

arn = "arn:aws:ec2:ap-northeast-1:111111111111:instance/i-0ba7a1f0f1b4358f1"

associate_public_ip_address = true

availability_zone = "ap-northeast-1c"

cpu_core_count = 1

cpu_threads_per_core = 1

disable_api_stop = false

disable_api_termination = false

ebs_optimized = false

get_password_data = false

hibernation = false

iam_instance_profile = "test-role"

id = "i-0ba7a1f0f1b4358f1"

instance_initiated_shutdown_behavior = "stop"

instance_state = "running"

instance_type = "t2.micro"

ipv6_address_count = 0

ipv6_addresses = []

key_name = "test-key"

monitoring = false

placement_partition_number = 0

primary_network_interface_id = "eni-xxxxxxxxxxxxxxxxx"

private_dns = "ip-xx-xx-xx-xx.ap-northeast-1.compute.internal"

private_ip = "xx.xx.xx.xx"

public_dns = "ec2-xx-xx-xx-xx.ap-northeast-1.compute.amazonaws.com"

public_ip = "xx.xx.xx.xx"

secondary_private_ips = []

security_groups = [

"test-sgp",

]

source_dest_check = true

subnet_id = "subnet-xxxxxxxx"

tags = {

"Name" = "niikawa-ec2"

}

tags_all = {

"Name" = "niikawa-ec2"

}

tenancy = "default"

vpc_security_group_ids = [

"sg-xxxxxxxxxxxxxxxxx",

]

capacity_reservation_specification {

capacity_reservation_preference = "open"

}

cpu_options {

core_count = 1

threads_per_core = 1

}

credit_specification {

cpu_credits = "standard"

}

enclave_options {

enabled = false

}

maintenance_options {

auto_recovery = "default"

}

metadata_options {

http_endpoint = "enabled"

http_protocol_ipv6 = "disabled"

http_put_response_hop_limit = 2

http_tokens = "required"

instance_metadata_tags = "disabled"

}

private_dns_name_options {

enable_resource_name_dns_a_record = true

enable_resource_name_dns_aaaa_record = false

hostname_type = "ip-name"

}

root_block_device {

delete_on_termination = true

device_name = "/dev/xvda"

encrypted = false

iops = 3000

tags = {}

tags_all = {}

throughput = 125

volume_id = "vol-xxxxxxxxxxxxxxxxx"

volume_size = 8

volume_type = "gp3"

}

}

Plan: 1 to import, 0 to add, 0 to change, 0 to destroy.

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply" now.

- Now run terraform apply. The result is "1 imported, 0 added, 0 changed, 0 destroyed.", so one resource was imported.

$ terraform apply

aws_instance.imported_resources_1: Preparing import... [id=i-0ba7a1f0f1b4358f1]

aws_instance.imported_resources_1: Refreshing state... [id=i-0ba7a1f0f1b4358f1]

Terraform will perform the following actions:

# aws_instance.imported_resources_1 will be imported

resource "aws_instance" "imported_resources_1" {

** omitted **

}

Plan: 1 to import, 0 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

aws_instance.imported_resources_1: Importing... [id=i-0ba7a1f0f1b4358f1]

aws_instance.imported_resources_1: Import complete [id=i-0ba7a1f0f1b4358f1]

Apply complete! Resources: 1 imported, 0 added, 0 changed, 0 destroyed.
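Because var.ec2_instance is already a map, Terraform 1.7.0 and later (this article uses 1.7.5) can also take for_each in an import block. That would let one block import several instances. The sketch below replaces the variable and import block above, under these assumptions:

- The key ec2_instance_2 and its ID are hypothetical.
- The resource name aws_instance.imported is hypothetical.
- The resource block is written by hand with the same for_each, not generated with -generate-config-out.

```hcl
variable "ec2_instance" {
  type = map(string)
  default = {
    ec2_instance_1 = "i-0ba7a1f0f1b4358f1"
    ec2_instance_2 = "i-xxxxxxxxxxxxxxxxx" # hypothetical second instance
  }
}

# Terraform >= 1.7.0: one import per map entry
import {
  for_each = var.ec2_instance
  id       = each.value                      # instance ID
  to       = aws_instance.imported[each.key] # e.g. aws_instance.imported["ec2_instance_1"]
}

# The target resource must be keyed the same way as the import
resource "aws_instance" "imported" {
  for_each = var.ec2_instance

  # Placeholders: real arguments must match each existing instance
  ami           = "ami-xxxxxxxxxxxxxxxxx"
  instance_type = "t2.micro"
}
```

If the instances differ (AMI, instance type, and so on), those values would have to come from a map of objects rather than fixed strings.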

Importing S3, Lambda, and S3 Notification resources

- Next, import S3, Lambda, and S3 Notification (event notification) resources with Terraform import blocks.

- Prepare existing resources that are not managed by Terraform.

- I created an S3 bucket from the AWS console.

- Next, I created a Lambda function.

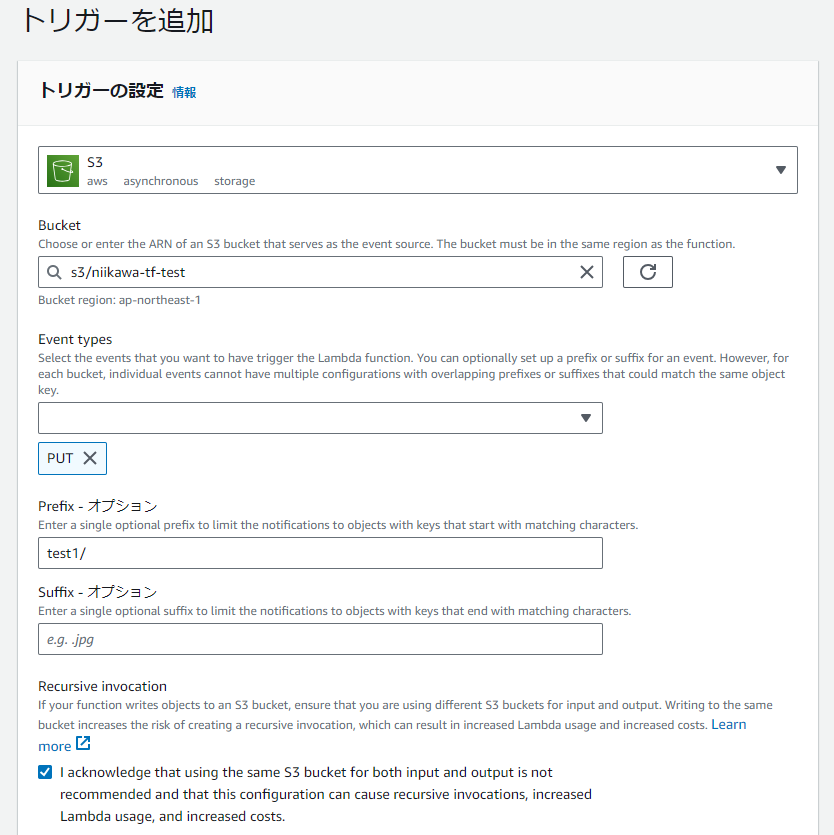

- Finally, I configured an S3 trigger on the Lambda function.

- Prepare a tf file containing the import blocks (e.g. import.tf). As in the EC2 case, each import block takes id (the ID of the AWS resource) and to ([resource block type].[resource block name]). id is again given as a variable, which requires Terraform v1.6.0 or later.

# ----------------------------------

# import block

# ----------------------------------

import {

id = var.bucket_name.bucket_name_1

to = aws_s3_bucket.imported_resources_1

}

import {

id = var.bucket_name.bucket_name_1

to = aws_s3_bucket_notification.imported_resources_1

}

import {

id = var.lambda_function.lambda_function_1

to = aws_lambda_function.imported_resources_1

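}

- Define the variables in variables.tf. Here, specify the names of the AWS resources (the S3 bucket and the Lambda function). For these resource types, the import ID is the bucket name or the function name.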

variable "bucket_name" {

type = map(string)

default = {

bucket_name_1 = "niikawa-tf-test"

}

}

variable "lambda_function" {

type = map(string)

default = {

lambda_function_1 = "niikawa-tf-lambda"

}

}

- Run terraform init.

$ terraform init

Initializing the backend...

Initializing provider plugins...

- Reusing previous version of hashicorp/aws from the dependency lock file

- Using previously-installed hashicorp/aws v5.44.0

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

- Run terraform plan. The following errors are displayed.

- Error: Configuration for import target does not exist

- According to the message, generating the resource blocks automatically requires passing the -generate-config-out option to plan.

$ terraform plan

╷

│ Error: Configuration for import target does not exist

│

│ on import.tf line 6, in import:

│ 6: to = aws_s3_bucket.imported_resources_1

│

│ The configuration for the given import target aws_s3_bucket.imported_resources_1 does not exist. If you wish to automatically generate config for this resource, use the -generate-config-out option within

│ terraform plan. Otherwise, make sure the target resource exists within your configuration. For example:

│

│ terraform plan -generate-config-out=generated.tf

╵

╷

│ Error: Configuration for import target does not exist

│

│ on import.tf line 11, in import:

│ 11: to = aws_s3_bucket_notification.imported_resources_1

│

│ The configuration for the given import target aws_s3_bucket_notification.imported_resources_1 does not exist. If you wish to automatically generate config for this resource, use the -generate-config-out option

│ within terraform plan. Otherwise, make sure the target resource exists within your configuration. For example:

│

│ terraform plan -generate-config-out=generated.tf

╵

╷

│ Error: Configuration for import target does not exist

│

│ on import.tf line 16, in import:

│ 16: to = aws_lambda_function.imported_resources_1

│

│ The configuration for the given import target aws_lambda_function.imported_resources_1 does not exist. If you wish to automatically generate config for this resource, use the -generate-config-out option within

│ terraform plan. Otherwise, make sure the target resource exists within your configuration. For example:

│

│ terraform plan -generate-config-out=generated.tf

╵

- Next, run terraform plan with the -generate-config-out option.

$ terraform plan -generate-config-out=main.tf

aws_s3_bucket_notification.imported_resources_1: Preparing import... [id=niikawa-tf-test]

aws_s3_bucket.imported_resources_1: Preparing import... [id=niikawa-tf-test]

aws_lambda_function.imported_resources_1: Preparing import... [id=niikawa-tf-lambda]

aws_s3_bucket_notification.imported_resources_1: Refreshing state... [id=niikawa-tf-test]

aws_s3_bucket.imported_resources_1: Refreshing state... [id=niikawa-tf-test]

aws_lambda_function.imported_resources_1: Refreshing state... [id=niikawa-tf-lambda]

Terraform planned the following actions, but then encountered a problem:

# aws_s3_bucket.imported_resources_1 will be imported

# (config will be generated)

resource "aws_s3_bucket" "imported_resources_1" {

arn = "arn:aws:s3:::niikawa-tf-test"

bucket = "niikawa-tf-test"

bucket_domain_name = "niikawa-tf-test.s3.amazonaws.com"

bucket_regional_domain_name = "niikawa-tf-test.s3.ap-northeast-1.amazonaws.com"

hosted_zone_id = "ZZZZZZZZZZZZZZ"

id = "niikawa-tf-test"

object_lock_enabled = false

region = "ap-northeast-1"

request_payer = "BucketOwner"

tags = {}

tags_all = {}

grant {

id = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

permissions = [

"FULL_CONTROL",

]

type = "CanonicalUser"

}

server_side_encryption_configuration {

rule {

bucket_key_enabled = true

apply_server_side_encryption_by_default {

sse_algorithm = "AES256"

}

}

}

versioning {

enabled = false

mfa_delete = false

}

}

# aws_s3_bucket_notification.imported_resources_1 will be imported

# (config will be generated)

resource "aws_s3_bucket_notification" "imported_resources_1" {

bucket = "niikawa-tf-test"

eventbridge = false

id = "niikawa-tf-test"

lambda_function {

events = [

"s3:ObjectCreated:Put",

]

filter_prefix = "test1/"

id = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"

lambda_function_arn = "arn:aws:lambda:ap-northeast-1:111111111111:function:niikawa-tf-lambda"

}

}

Plan: 2 to import, 0 to add, 0 to change, 0 to destroy.

╷

│ Warning: Config generation is experimental

│

│ Generating configuration during import is currently experimental, and the generated configuration format may change in future versions.

╵

╷

│ Error: Invalid combination of arguments

│

│ with aws_lambda_function.imported_resources_1,

│ on main.tf line 4:

│ (source code not available)

│

│ "filename": one of `filename,image_uri,s3_bucket` must be specified

╵

╷

│ Error: Invalid combination of arguments

│

│ with aws_lambda_function.imported_resources_1,

│ on main.tf line 7:

│ (source code not available)

│

│ "image_uri": one of `filename,image_uri,s3_bucket` must be specified

╵

╷

│ Error: Invalid combination of arguments

│

│ with aws_lambda_function.imported_resources_1,

│ on main.tf line 16:

│ (source code not available)

│

│ "s3_bucket": one of `filename,image_uri,s3_bucket` must be specified

╵

- As a result, main.tf was generated automatically, but the errors below were displayed. To deploy code, one of filename, image_uri, or s3_bucket must be specified.

- Error: Invalid combination of arguments

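- This time, I use the Archive provider so that Terraform builds the zip file itself. Create a src folder and the folder for output_path (upload), and put the code to deploy (e.g. tr_lambda.py) in src.
- Note that the imported function's handler is lambda_function.lambda_handler. The deployed package therefore needs a lambda_function.py that defines lambda_handler, or the handler must be changed to match the file name. Otherwise the function fails when invoked, even though the import itself succeeds.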

- Edit main.tf by hand. I added the following data block.

data "archive_file" "tr_lambda" {

type = "zip"

source_dir = "src"

output_path = "upload/lambda.zip"

}

- Next, edit filename and source_code_hash so that they reference the data block.

# __generated__ by Terraform

resource "aws_lambda_function" "imported_resources_1" {

architectures = ["x86_64"]

code_signing_config_arn = null

description = null

# filename = null

filename = data.archive_file.tr_lambda.output_path

function_name = "niikawa-tf-lambda"

handler = "lambda_function.lambda_handler"

image_uri = null

kms_key_arn = null

layers = []

memory_size = 128

package_type = "Zip"

publish = null

reserved_concurrent_executions = -1

role = "arn:aws:iam::111111111111:role/test-role"

runtime = "python3.12"

s3_bucket = null

s3_key = null

s3_object_version = null

skip_destroy = false

# source_code_hash = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx="

source_code_hash = data.archive_file.tr_lambda.output_base64sha256

** omitted **

}

- Run terraform plan. This time an error about the dependency lock file appeared.

- Error: Inconsistent dependency lock file

$ terraform plan

╷

│ Error: Inconsistent dependency lock file

│

│ The following dependency selections recorded in the lock file are inconsistent with the current configuration:

│ - provider registry.terraform.io/hashicorp/archive: required by this configuration but no version is selected

│

│ To update the locked dependency selections to match a changed configuration, run:

│ terraform init -upgrade

╵

- Run terraform init -upgrade, as the error message suggests.

$ terraform init -upgrade

Initializing the backend...

Initializing provider plugins...

- Finding latest version of hashicorp/aws...

- Finding latest version of hashicorp/archive...

- Installing hashicorp/archive v2.4.2...

- Installed hashicorp/archive v2.4.2 (signed by HashiCorp)

- Using previously-installed hashicorp/aws v5.44.0

Terraform has made some changes to the provider dependency selections recorded

in the .terraform.lock.hcl file. Review those changes and commit them to your

version control system if they represent changes you intended to make.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

- Run terraform plan again. No errors this time. The result is "Plan: 3 to import, 0 to add, 1 to change, 0 to destroy.", meaning three resources will be imported and one will be changed. The change concerns the code deployment (filename and source_code_hash).

$ terraform plan

data.archive_file.tr_lambda: Reading...

data.archive_file.tr_lambda: Read complete after 0s [id=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx]

aws_s3_bucket.imported_resources_1: Preparing import... [id=niikawa-tf-test]

aws_s3_bucket_notification.imported_resources_1: Preparing import... [id=niikawa-tf-test]

aws_lambda_function.imported_resources_1: Preparing import... [id=niikawa-tf-lambda]

aws_s3_bucket_notification.imported_resources_1: Refreshing state... [id=niikawa-tf-test]

aws_lambda_function.imported_resources_1: Refreshing state... [id=niikawa-tf-lambda]

aws_s3_bucket.imported_resources_1: Refreshing state... [id=niikawa-tf-test]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

~ update in-place

Terraform will perform the following actions:

# aws_lambda_function.imported_resources_1 will be updated in-place

# (imported from "niikawa-tf-lambda")

~ resource "aws_lambda_function" "imported_resources_1" {

architectures = [

"x86_64",

]

arn = "arn:aws:lambda:ap-northeast-1:111111111111:function:niikawa-tf-lambda"

+ filename = "upload/lambda.zip"

function_name = "niikawa-tf-lambda"

handler = "lambda_function.lambda_handler"

id = "niikawa-tf-lambda"

invoke_arn = "arn:aws:apigateway:ap-northeast-1:lambda:path/2015-03-31/functions/arn:aws:lambda:ap-northeast-1:111111111111:function:niikawa-tf-lambda/invocations"

~ last_modified = "2024-04-08T15:33:54.942+0000" -> (known after apply)

layers = []

memory_size = 128

package_type = "Zip"

+ publish = false

qualified_arn = "arn:aws:lambda:ap-northeast-1:111111111111:function:niikawa-tf-lambda:$LATEST"

qualified_invoke_arn = "arn:aws:apigateway:ap-northeast-1:lambda:path/2015-03-31/functions/arn:aws:lambda:ap-northeast-1:111111111111:function:niikawa-tf-lambda:$LATEST/invocations"

reserved_concurrent_executions = -1

role = "arn:aws:iam::111111111111:role/test-role"

runtime = "python3.12"

skip_destroy = false

~ source_code_hash = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx=" -> "yyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyy="

source_code_size = 341

tags = {}

tags_all = {}

timeout = 3

version = "$LATEST"

ephemeral_storage {

size = 512

}

logging_config {

log_format = "Text"

log_group = "/aws/lambda/niikawa-tf-lambda"

}

tracing_config {

mode = "PassThrough"

}

}

# aws_s3_bucket.imported_resources_1 will be imported

resource "aws_s3_bucket" "imported_resources_1" {

arn = "arn:aws:s3:::niikawa-tf-test"

bucket = "niikawa-tf-test"

bucket_domain_name = "niikawa-tf-test.s3.amazonaws.com"

bucket_regional_domain_name = "niikawa-tf-test.s3.ap-northeast-1.amazonaws.com"

hosted_zone_id = "ZZZZZZZZZZZZZZ"

id = "niikawa-tf-test"

object_lock_enabled = false

region = "ap-northeast-1"

request_payer = "BucketOwner"

tags = {}

tags_all = {}

grant {

id = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

permissions = [

"FULL_CONTROL",

]

type = "CanonicalUser"

}

server_side_encryption_configuration {

rule {

bucket_key_enabled = true

apply_server_side_encryption_by_default {

sse_algorithm = "AES256"

}

}

}

versioning {

enabled = false

mfa_delete = false

}

}

# aws_s3_bucket_notification.imported_resources_1 will be imported

resource "aws_s3_bucket_notification" "imported_resources_1" {

bucket = "niikawa-tf-test"

eventbridge = false

id = "niikawa-tf-test"

lambda_function {

events = [

"s3:ObjectCreated:Put",

]

filter_prefix = "test1/"

id = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"

lambda_function_arn = "arn:aws:lambda:ap-northeast-1:111111111111:function:niikawa-tf-lambda"

}

}

Plan: 3 to import, 0 to add, 1 to change, 0 to destroy.

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply" now.

- Now run terraform apply. The result is "3 imported, 0 added, 1 changed, 0 destroyed.", and all three resources were imported successfully.

$ terraform apply

data.archive_file.tr_lambda: Reading...

data.archive_file.tr_lambda: Read complete after 0s [id=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx]

aws_s3_bucket_notification.imported_resources_1: Preparing import... [id=niikawa-tf-test]

aws_s3_bucket.imported_resources_1: Preparing import... [id=niikawa-tf-test]

aws_lambda_function.imported_resources_1: Preparing import... [id=niikawa-tf-lambda]

aws_s3_bucket_notification.imported_resources_1: Refreshing state... [id=niikawa-tf-test]

aws_s3_bucket.imported_resources_1: Refreshing state... [id=niikawa-tf-test]

aws_lambda_function.imported_resources_1: Refreshing state... [id=niikawa-tf-lambda]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

~ update in-place

Terraform will perform the following actions:

# aws_lambda_function.imported_resources_1 will be updated in-place

# (imported from "niikawa-tf-lambda")

~ resource "aws_lambda_function" "imported_resources_1" {

** omitted **

}

# aws_s3_bucket.imported_resources_1 will be imported

resource "aws_s3_bucket" "imported_resources_1" {

** omitted **

}

# aws_s3_bucket_notification.imported_resources_1 will be imported

resource "aws_s3_bucket_notification" "imported_resources_1" {

** omitted **

}

Plan: 3 to import, 0 to add, 1 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

aws_s3_bucket_notification.imported_resources_1: Importing... [id=niikawa-tf-test]

aws_s3_bucket_notification.imported_resources_1: Import complete [id=niikawa-tf-test]

aws_lambda_function.imported_resources_1: Importing... [id=niikawa-tf-lambda]

aws_lambda_function.imported_resources_1: Import complete [id=niikawa-tf-lambda]

aws_s3_bucket.imported_resources_1: Importing... [id=niikawa-tf-test]

aws_s3_bucket.imported_resources_1: Import complete [id=niikawa-tf-test]

aws_lambda_function.imported_resources_1: Modifying... [id=niikawa-tf-lambda]

aws_lambda_function.imported_resources_1: Modifications complete after 5s [id=niikawa-tf-lambda]

Apply complete! Resources: 3 imported, 0 added, 1 changed, 0 destroyed.

- Finally, run terraform state list to confirm. It shows that the S3, Lambda, and S3 Notification (event notification) resources are now managed by Terraform.

$ terraform state list

data.archive_file.tr_lambda

aws_lambda_function.imported_resources_1

aws_s3_bucket.imported_resources_1

aws_s3_bucket_notification.imported_resources_1
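The state list contains no aws_lambda_permission. When an S3 trigger is added from the Lambda console, the console normally also adds a statement to the function's resource-based policy that allows S3 to invoke it. That statement is still unmanaged here. Below is a sketch of importing it the same way, under these assumptions:

- The import ID uses the hashicorp/aws format function_name/statement_id.
- The statement ID is hypothetical. The real one can be read from the function's resource-based policy.

Changes to aws_lambda_permission are applied by replacement rather than in place. For that reason, generating the block with -generate-config-out, as done above, is probably safer than writing it by hand.

```hcl
import {
  # Import ID format: <function_name>/<statement_id>
  id = "niikawa-tf-lambda/lambda-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx" # hypothetical statement ID
  to = aws_lambda_permission.imported_resources_1
}

# Hand-written target; every value must match the existing policy statement
resource "aws_lambda_permission" "imported_resources_1" {
  statement_id  = "lambda-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx" # hypothetical
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.imported_resources_1.function_name
  principal     = "s3.amazonaws.com"
  source_arn    = aws_s3_bucket.imported_resources_1.arn
  # Add source_account if the existing statement has an AWS:SourceAccount condition
}
```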

References